Q. What should I do if my Agent Desktop is freezing or lagging?

A. If you’re experiencing freezing or lag within Agent Desktop, follow these troubleshooting steps:

- First, check your network connection. This applies whether you’re working in the office or remotely.

- Ensure your network strength meets Kore’s defined parameters. Refer to Minimum System Requirements and Supported Browsers.

- Once you’ve verified your network connection, perform User Diagnostics.

Q. Which context variable helps to identify the incoming call number?

A. The Caller and Callee numbers can be identified using:

context.BotUserSession.channels[0].handle

Below is an example of its usage in an experience flow:

setCallFlowVariable('caller', context.BotUserSession.channels[0].handle.Caller);

var caller = getCallFlowVariable('caller');

userSessionUtils.put('Caller', caller);

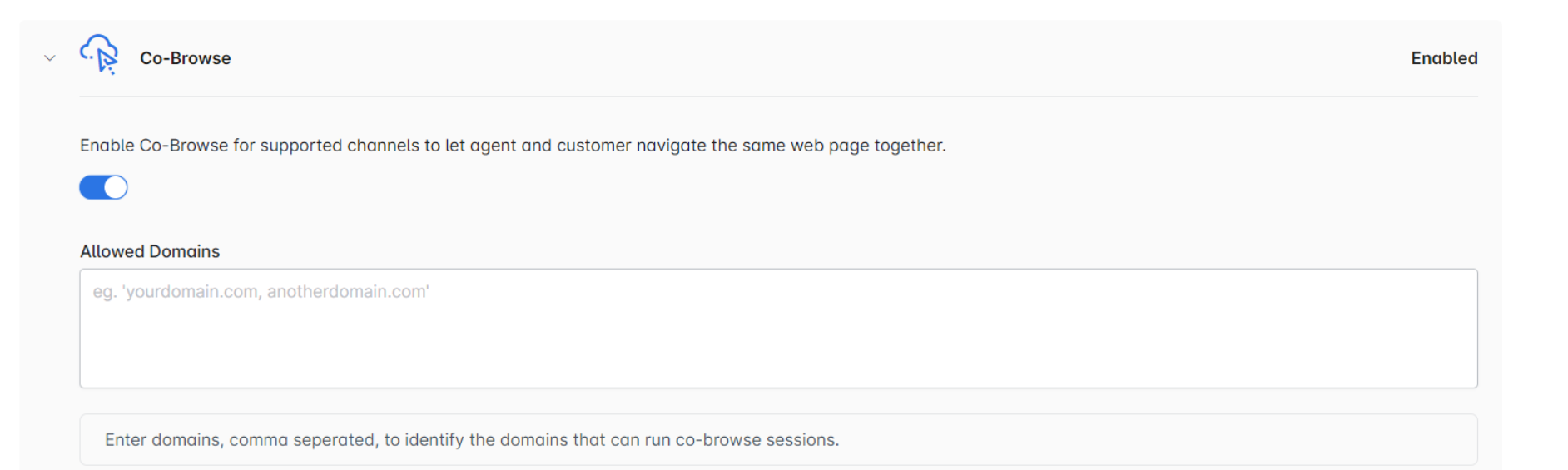
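The lookup above can fail if a channel or handle is missing. Below is a minimal sketch of reading both numbers defensively. It assumes the handle object exposes `Caller` and `Callee` keys, as the answer implies. The `context` object is a hand-built stand-in for the one the platform supplies at runtime, and `getCallParties` is a hypothetical helper name.

```javascript
// Stand-in for the runtime context; on the platform this object is supplied for you.
const context = {
  BotUserSession: {
    channels: [{ handle: { Caller: "+15554441234", Callee: "+15556667777" } }]
  }
};

// Hypothetical helper: returns the caller and callee numbers, or null when absent.
function getCallParties(ctx) {
  const channels = (ctx.BotUserSession && ctx.BotUserSession.channels) || [];
  const handle = (channels[0] && channels[0].handle) || {};
  return {
    caller: handle.Caller || null,
    callee: handle.Callee || null // the "Callee" key name is an assumption
  };
}

const parties = getCallParties(context);
console.log(parties.caller, parties.callee);
```

Returning null instead of throwing lets a later node decide how to handle a call that arrives without number information.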

Q. After enabling the Co-Browsing feature on the Agent Desktop, no popup or request appears in the end user’s chat window. It just waits for 60 seconds and then closes. Are any additional settings required to make the Co-Browsing feature function as intended?

A. Add the source URL where your web chat is deployed to the Allowed Domains. Also make sure that your copy of the Web SDK supports Co-Browse.

Q. How do you set up skills-based routing?

A. Before transferring the interaction, set the JavaScript variables below in the agent deflection dialog. The userType object passed to the setSkills function uses the ID generated during skill creation in SmartAssist.

You can use the agentUtils functions setUserInfo and setSkills to pass user information to agents and set the required skills for skill-based agent routing. Call these functions as needed before transferring to an agent using the SAT_AgentTransfer node.

setUserInfo

var userInfo = {

"John Doe": {

"firstName": "John",

"lastName": "Doe",

"email": "John@kore.com",

"phoneNumber": "9951523523"

}

};

var user = "John Doe";

agentUtils.setUserInfo(userInfo[user]);

setSkills

var userType = {

"incident": {

"id": "618262f8ebf0721c083721cb",

"name": "Incident"

}

};

agentUtils.setSkills([userType["incident"]]);
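The two calls above can be wrapped in one helper that runs just before the transfer node. This is a rough sketch, not the platform's own implementation. The `agentUtils` object here is a recording stub (the real one is provided at runtime), and `skillsByIntent` and `prepareTransfer` are hypothetical names introduced for illustration.

```javascript
// Recording stub; on the platform, agentUtils is provided at runtime.
const calls = [];
const agentUtils = {
  setUserInfo: (info) => calls.push(["setUserInfo", info]),
  setSkills: (skills) => calls.push(["setSkills", skills])
};

// Skill catalog keyed by a hypothetical intent label; the ID is the sample one from above.
const skillsByIntent = {
  incident: { id: "618262f8ebf0721c083721cb", name: "Incident" }
};

// Hypothetical helper: sets user info and the matching skill before agent transfer.
function prepareTransfer(user, intent) {
  const skill = skillsByIntent[intent];
  if (!skill) {
    return false; // unknown intent: nothing is set
  }
  agentUtils.setUserInfo(user);
  agentUtils.setSkills([skill]);
  return true;
}

const ok = prepareTransfer(
  { firstName: "John", lastName: "Doe", email: "John@kore.com", phoneNumber: "9951523523" },
  "incident"
);
console.log(ok, calls.length);
```

Keeping the skill IDs in one lookup table means a new skill only needs a new table entry rather than a change to the transfer logic.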

Q. What util functions are available to be used in experience flows?

A. You can use a variety of functions to create experience flows. Some of these functions include:

Function for dynamic Transfer SIP:

agentUtils.setTransferSipURI( {{sip URI}} )

Function for dynamic Transfer Phone Number:

agentUtils.setTransferPhoneNumber( {{phoneNumber}} )

Function for dynamic Transfer Target:

agentUtils.setTransferTarget("sip:+12345@telnyx.dummy.com");

Function to Set a Bot Language:

agentUtils.setBotLanguage({{langCode}})

Function to Set the Priority:

agentUtils.setPriority(priority<Number>)

Function to Set the MetaInfo:

agentUtils.setMetaInfo({{Metainfo}})

Function to Set the UserInfo:

agentUtils.setUserInfo({{Userinfo}})

Function to Set a Queue:

agentUtils.setQueue({{queue}})

Function to Set Skills:

agentUtils.setSkills([{{skill}}])

You can combine these functions to create dynamic and engaging user experience flows.
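As an illustration of combining these functions, the sketch below sets a language, a priority, and a transfer destination in one place. It uses a recording stub in place of the real `agentUtils`. The `configureTransfer` helper, the `"en"` language code, the priority value, and the rule that a `sip:` prefix selects the SIP function are all assumptions made for the example.

```javascript
// Recording stub; the real agentUtils object is supplied by the platform.
const recorded = [];
const agentUtils = {};
["setBotLanguage", "setPriority", "setTransferSipURI", "setTransferPhoneNumber"].forEach((name) => {
  agentUtils[name] = (value) => recorded.push(name + ":" + value);
});

// Hypothetical helper combining several of the functions listed above.
function configureTransfer(options) {
  agentUtils.setBotLanguage(options.langCode);
  agentUtils.setPriority(options.priority);
  if (options.target.startsWith("sip:")) {
    agentUtils.setTransferSipURI(options.target);
  } else {
    agentUtils.setTransferPhoneNumber(options.target);
  }
}

configureTransfer({ langCode: "en", priority: 5, target: "+15554441234" });
console.log(recorded);
```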

Q. Does SmartAssist provide a context object to view the first user message?

A. Yes. Add the following context object in the split task to check the first input message and route the conversation based on it. You can use this context object to understand the user’s intent and context.

{{context.BotUserSession.lastMessage.messagePayload.message.body}}
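A minimal sketch of routing on that value is shown below. The `context` object is a stand-in for the runtime one, and the keyword table, route names, and `routeForFirstMessage` helper are hypothetical. A real flow would usually rely on intent detection rather than keyword matching.

```javascript
// Stand-in context; the platform populates lastMessage at runtime.
const context = {
  BotUserSession: {
    lastMessage: { messagePayload: { message: { body: "I want to check my order status" } } }
  }
};

// Hypothetical keyword-to-route table, purely for illustration.
const routes = [
  { keyword: "order", route: "orders" },
  { keyword: "refund", route: "billing" }
];

// Reads the first message body defensively and picks the first matching route.
function routeForFirstMessage(ctx) {
  const lastMessage = ctx.BotUserSession.lastMessage || {};
  const payload = lastMessage.messagePayload || {};
  const body = ((payload.message || {}).body || "").toLowerCase();
  const match = routes.find((r) => body.includes(r.keyword));
  return match ? match.route : "default";
}

console.log(routeForFirstMessage(context));
```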

Q. How do I access the caller’s ANI (calling phone number)?

A. You can access the caller’s ANI through the context variable: {{context.session.BotUserSession.channels[0].handle.Caller}}

The number includes the country code, for example: “+15554441234”

SmartAssist V1

The Caller number is directly available in the bot context at {{context.session.UserSession.Caller}}

SmartAssist V2

In SmartAssist V2, add a script node before the conversational input (or before Automation, if there is no conversational input) with the following script:

setCallFlowVariable('caller', context.BotUserSession.channels[0].handle.Caller);

var caller = getCallFlowVariable('caller');

userSessionUtils.put('Caller', caller);

The Caller number is then directly available in the bot context at:

{{context.session.UserSession.Caller}}

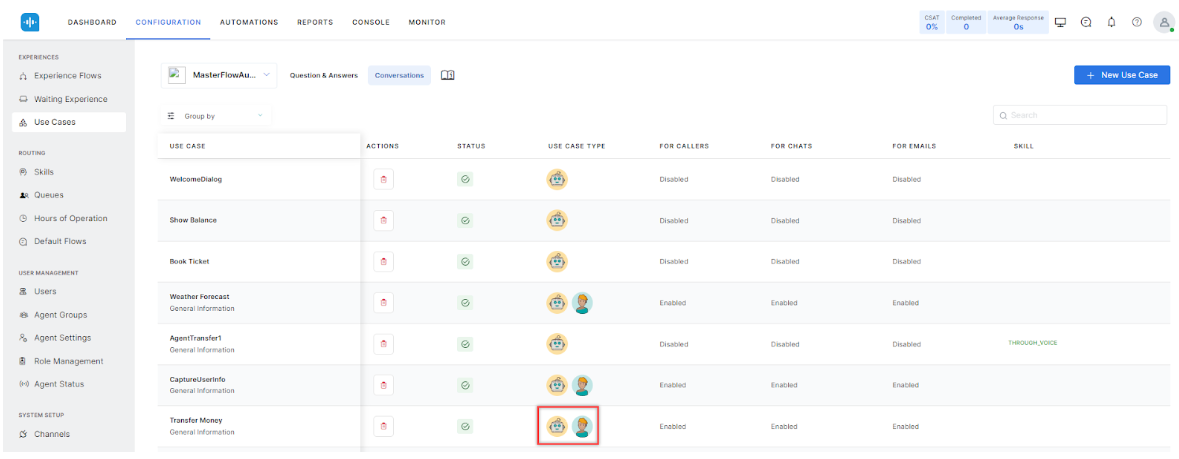

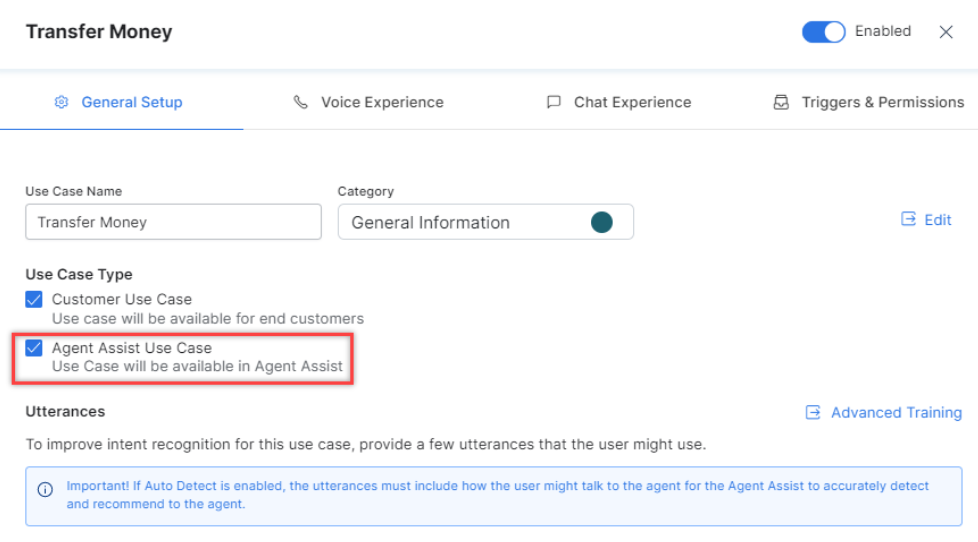
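Because the ANI is expected to carry a country code, it can be useful to check the value before relying on it. The sketch below uses a commonly used E.164 pattern (a plus sign, a non-zero digit, then up to 14 more digits). `callerOrNull` is a hypothetical helper, and the object passed to it stands in for the user session.

```javascript
// Commonly used E.164 pattern: "+", a non-zero digit, then 1 to 14 more digits.
const E164_PATTERN = /^\+[1-9]\d{1,14}$/;

function isE164(number) {
  return typeof number === "string" && E164_PATTERN.test(number);
}

// Hypothetical helper: returns the caller number only if it looks like E.164.
function callerOrNull(userSession) {
  const caller = userSession && userSession.Caller;
  return isE164(caller) ? caller : null;
}

console.log(callerOrNull({ Caller: "+15554441234" }));
console.log(callerOrNull({ Caller: "5554441234" }));
```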

Q. How do I configure AgentAssist automated suggestions?

A. You can configure automated suggestions from the SmartAssist ‘Use Cases’ configuration section. If you enable them for AgentAssist, an agent icon will appear next to your automations.

To configure automated suggestions, click the Use Case, and the configuration screen appears. Select Agent Assist Use Case and save your changes. Publish your automations in the Bots Builder view. Skipping this step prevents the changes from taking effect.

Q. What languages does SmartAssist support?

A. SmartAssist uses the language support of the Kore.ai XO Platform.

This support includes language-agnostic NLP models, allowing you to engage with customers in over 125 languages.

You can train these models in any language of your choice. Additionally, the platform allows you to create language-specific models for over 25 popular languages.

Stay informed about updates because the platform continuously adds new language support.

Q. How can SmartAssist be integrated with third-party systems?

A. SmartAssist utilizes the APIs, SDKs, and connectors the XO Platform provides for seamless integration with third-party systems.

You can control the flow of data and personalize the user experience. Additionally, our SDKs allow organizations to embed virtual assistants into iOS and Android apps or the web.

You can easily customize the appearance of SmartAssist.

Q. Is it possible to share call recordings via SMS where recipients can simply click a link to listen to the recording?

A. While there is an API available to retrieve call recordings, sharing them via SMS isn’t currently supported. This limitation is in place to ensure the controlled access of call recordings, which may contain Personally Identifiable Information (PII) or other confidential data.

Q. Is the data provided by the SmartAssist API for queue size and agent availability live (real-time), or is there a delay?

A. The data provided by the SmartAssist API for queue size and agent availability is real-time.

Q. Can we manipulate the Caller ID entry for purchased numbers used in outbound calls within a specific SmartAssist tenant? Is it possible to “spoof” a customer’s number so their Caller ID is displayed when they make calls? Additionally, if a customer has “verified” phone numbers with branding, can we leverage that?

A. No, it’s not possible to manipulate the Caller ID entry for purchased numbers or “spoof” a customer’s number within the SmartAssist system. Leveraging verified phone numbers with branding is not supported for this purpose.

Q. To retrieve the caller number from the SIP header, is it necessary to configure the PBX/IVR to transmit the caller/callee number, or are these automatically included within the SIP header?

A. You need to designate the “from” and “to” fields as the caller and callee respectively from the PBX, and then route the call to Kore. Kore will automatically interpret this information from the SIP header without the need for additional configuration.

Q. Do end-users need to initiate a voice interaction with agents through the web client?

A. No, initiating a voice interaction doesn’t necessarily have to start from the web client. The web client is primarily utilized for receiving OTP input and establishing a connection.

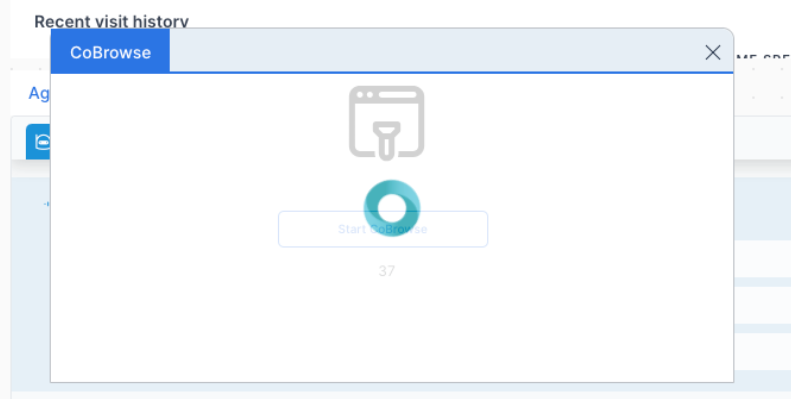

Q. If end-users call a number directly within an experience flow, does Voice Co-Browse function?

A. Yes, Voice Co-Browse functionality is available for numbers that are set up within the experience flow. To initiate Co-Browsing, users need to reach a stage where One Time Password (OTP) input is required, which is facilitated by Web SDK 2.0.

The connection is validated using the Web SDK 2.0 on the website. API details can be provided to allow users to input the OTP and establish a connection, assisting customers in configuring the initiation point for Co-Browsing.

Q. How can I transfer some of my calls to agents on SmartAssist and the remaining ones to an external SIP URL or a phone number?

A. You can do this by adding the following snippet in a message node with “IVR-Audiocodes” channel override at the right place in your conversation flow. This would typically be done right before the agent transfer.

var message = {"activities": [

{

"type": "event",

"id": new Date().getTime(),

"timestamp": new Date().toISOString(),

"name": "transfer",

"activityParams": {

"transferTarget": "tel:<phone number in E.164 format>"

}

}

]

};

print(JSON.stringify(message));

If you want to transfer to a SIP URI, set the transfer target as follows: "transferTarget":"sip:+123344234232@2.3.4.5:5060"
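The same payload can be produced by a small builder that accepts either kind of target. This is a sketch under stated assumptions: `buildTransferMessage` is a hypothetical helper, and the input validation is an addition that the platform does not require. In a message node you would pass the result to `print(JSON.stringify(...))` as in the snippet above, whereas this standalone version uses `console.log`.

```javascript
// Builds the transfer event shown above for either a phone number or a SIP URI.
function buildTransferMessage(target) {
  const isSip = target.startsWith("sip:");
  const isTel = /^\+[1-9]\d{1,14}$/.test(target); // E.164 phone number
  if (!isSip && !isTel) {
    throw new Error("Expected an E.164 number or a sip: URI, got: " + target);
  }
  const now = new Date();
  return {
    activities: [
      {
        type: "event",
        id: now.getTime(),
        timestamp: now.toISOString(),
        name: "transfer",
        activityParams: { transferTarget: isSip ? target : "tel:" + target }
      }
    ]
  };
}

const message = buildTransferMessage("+15554441234");
console.log(JSON.stringify(message));
```

Failing early on a malformed target keeps a bad value from reaching the voice gateway, where the error would be harder to trace.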

Q. Are interaction statuses customizable? Do these interaction statuses apply to outbound calls?

A. No, the default interaction statuses are predefined and cannot be altered/customized. Yes, they apply to outbound calls.

Q. How long are calls and chats stored?

A. Call or voice data is stored for a default period of 3 months and chat data is stored for a default period of 7 years.

Q. What languages does the SmartAssist bot support when creating a new account?

A. The SmartAssist bot supports creation in the following languages:

- Arabic

- Chinese Simplified

- Chinese Traditional

- English

- German

- Hindi

- Japanese

- Khmer

- Lao

- Malay

- Polish

- Portuguese

- Spanish

- Telugu

- Thai

- Vietnamese

Q. What languages are supported for the platform interface with SmartAssist?

A. The platform interface with SmartAssist is available in the following languages:

- English (United States)

- Japanese (Japan)

- Korean (South Korea)

- Simplified Chinese (China)

- Spanish (Spain)

- French (France)

- Italian (Italy)

Q. Is there an API for creating external contacts for outbound and consult calls?

A. Yes. You can create an external contact using the ‘Create a Contact’ API and provide the required details. Additionally, you can use the ‘Create Bulk Contacts’ API to update a list of external contacts from a CSV file.

Q. How can bot developers leverage ASR confidence levels within XO Contact Center for refining bot interactions?

A. XO Contact Center uses Automatic Speech Recognition (ASR) engines to convert voice input to text. When an ASR engine processes a voice stream, it generates multiple interpretations, each assigned a confidence value. These engines identify the most accurate interpretation by considering a combination of factors.

Bot developers can use these confidence levels to adjust bot flows by incorporating ASR confidence values into the conversation context. For example:

If the ASR’s confidence level for an entity falls below a predetermined threshold, the bot can re-prompt the customer for clarification.

This can be done as follows:

- Bot developers can write a bot function to abstract the ASR confidence evaluation logic throughout the bot flow.

- The confidence values from the last user input are included in the context object. KoreVG adds these values when it receives a speech-to-text hypothesis. You can read them using the lastUtteranceConfidence key.

Syntax:

let lastUtteranceConfidence = context.session.UserSession.lastUtteranceConfidence;

let text = lastUtteranceConfidence.text;

let confidenceValue = lastUtteranceConfidence.confidence;

The following values are provided from each ASR:

- Text Hypothesis

- Confidence Value

- Additional values in the case of Azure:

- "lexical": "i want to buy something"

- "itn": "i want to buy something"

- "masked_i_t_n": "i want to buy something"

- "display": "I want to buy something."

- Example 1:

{

"lastUtteranceConfidence": {

"text": "Hello this is ASR confidence test.",

"confidence": 0.77108324,

"n_best": [

{

"text": "Hello this is ASR confidence test.",

"confidence": 0.77108324,

"lexical": "hello this is ASR confidence test",

"itn": "hello this is ASR confidence test",

"masked_i_t_n": "hello this is asr confidence test",

"display": "Hello this is ASR confidence test."

}

]

}

}

- Example 2: Multiple messages (when Continuous ASR is enabled)

{

"lastUtteranceConfidence": {

"text": "Hello, this is voice testing This is continuous, yes, R testing Hello. Hello",

"confidence": 0.8540497166666666,

"n_best": [

{

"text": "Hello, this is voice testing",

"confidence": 0.8890478,

"lexical": "hello this is voice testing",

"itn": "hello this is voice testing",

"masked_i_t_n": "hello this is voice testing",

"display": "Hello, this is voice testing."

},

{

"text": "This is continuous, yes, R testing",

"confidence": 0.6974927,

"lexical": "this is continuous yes R testing",

"itn": "this is continuous yes R testing",

"masked_i_t_n": "this is continuous yes r testing",

"display": "This is continuous, yes, R testing."

},

{

"text": "Hello. Hello",

"confidence": 0.97560865,

"lexical": "hello hello",

"itn": "hello hello",

"masked_i_t_n": "hello hello",

"display": "Hello. Hello."

}

]

},

"orgId": "o-88aad7f1-0d32-5765-93d7-f40c8040xxxx"

}

- The confidence data from the last processed nodes is included.

- This is applicable for bots configured with KoreVG.
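The re-prompt check described in this answer could be sketched as the bot function below. The sample object follows the shape of Example 1, trimmed to the fields used here. `shouldReprompt` and the threshold values are hypothetical. Treating missing confidence data as unreliable is a design choice made for this example, not documented platform behavior.

```javascript
// Sample shaped like Example 1 above (trimmed to the fields used here).
const lastUtteranceConfidence = {
  text: "Hello this is ASR confidence test.",
  confidence: 0.77108324,
  n_best: [{ text: "Hello this is ASR confidence test.", confidence: 0.77108324 }]
};

// Hypothetical bot function: true when the bot should ask the user to repeat.
// In Example 2 (Continuous ASR), n_best holds one entry per message, so the
// lowest per-entry confidence is checked as well as the overall value.
function shouldReprompt(utterance, threshold) {
  if (!utterance || typeof utterance.confidence !== "number") {
    return true; // no confidence data: treated as unreliable (a design choice)
  }
  const perEntry = (utterance.n_best || []).map((h) => h.confidence);
  const lowest = Math.min(utterance.confidence, ...perEntry);
  return lowest < threshold;
}

console.log(shouldReprompt(lastUtteranceConfidence, 0.6));
console.log(shouldReprompt(lastUtteranceConfidence, 0.8));
```

In a bot flow, the first argument would come from `context.session.UserSession.lastUtteranceConfidence` instead of the inline sample.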

Q. Are end-to-end conversations recorded, both between the voice bot and the user and, after agent transfer, between the user and the agent?

A. Yes. SmartAssist records end-to-end conversations, including interactions between the voice bot and the user, and conversations after agent transfer. Click the next button to listen to the recording after the agent transfer.

Q. How is re-routing handled when a conversation is transferred between agents or queues?

A. It depends on the type of transfer:

- Agent-to-Queue Transfer: When a request is transferred to a queue, it is added to the target queue, and the routing process continues among the agents in that queue.

- Agent-to-Agent Transfer: When a request is transferred directly to an agent, it is assigned to the specific agent. If re-routing occurs, the routing will be handled among agents from the source queue, not the target agent’s queue, as the target agent may belong to multiple queues.

Q. How long do I need to wait for the arrival summary to be generated?

A. The arrival summary takes approximately 7 seconds, depending on the length of the conversation.

Q. How long do I need to wait for the disposition summary to be generated?

A. The disposition summary takes approximately 15 seconds, depending on the length of the conversation.

Q. What causes the delay in summary generation?

A. Three main factors contribute to summary generation delays:

- Conversation length (for both chat and voice interactions)

- Multiple simultaneous user conversations

- Complexity of conversational language

Q. Do I need to wait for the disposition summary to be generated?

A. Yes, agents must wait for the disposition summary to be generated before supervisors can view it in the Interactions tab.

Q. Why is there a delay when transferring from the bot to an agent?

A. The delay is typically caused by the time required to generate the landing summary.