Introduction

This document explains the integration steps between ID R&D and the Kore platform. It details the functionality of ID R&D and offers step-by-step instructions on effectively using this integration while constructing conversational flows.

Prerequisites

To use ID R&D with a Kore bot, ID R&D must first be enabled for your account.

Integration Architecture

ID R&D offers the following core functionalities:

- Generate voice templates from voice files.

- Compare voice templates to identify a probable match.

Kore manages all other operational aspects, including access governance, performance management, deployment, and maintenance. For performance and security reasons, ID R&D runs as an independent service within the broader Kore architecture, and Kore has developed additional scaffolding to integrate this service into the platform.

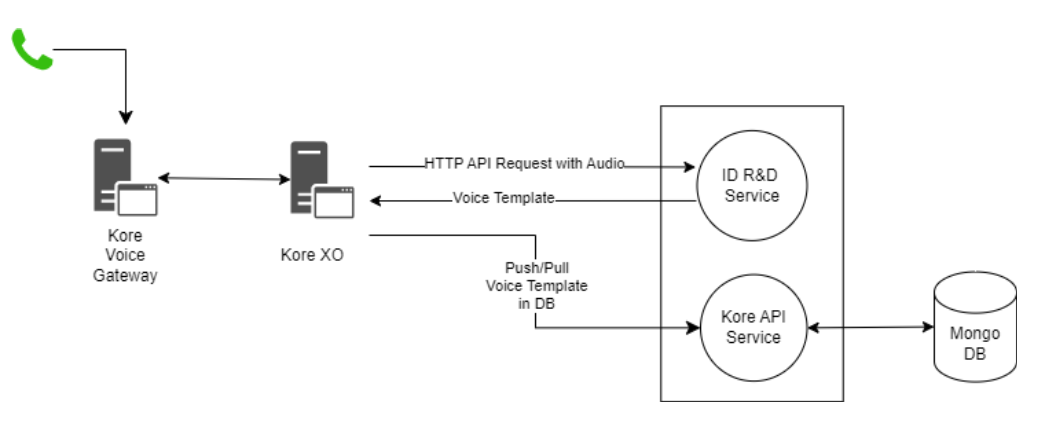

The diagram below describes this integration at a very high level:

The Kore Voice Gateway manages calls coming into the Kore Contact Center, which are then forwarded to the separately deployed ID R&D instances to generate voice templates.
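The division of responsibilities described above can be pictured as a narrow, two-operation service boundary. The sketch below is illustrative only and is written in Python for brevity. The class names, method signatures, and the toy stand-in implementation are assumptions and do not represent the actual ID R&D or Kore APIs; only the name match_voice_templates appears in this document.

```python
from typing import Protocol, Tuple


class VoiceBiometricService(Protocol):
    """Hypothetical view of the two operations ID R&D provides."""

    def create_voice_template(self, audio: bytes) -> str:
        """Generate an encoded voice template from a voice file."""
        ...

    def match_voice_templates(self, a: str, b: str) -> Tuple[float, float]:
        """Compare two templates; return (match score, probability)."""
        ...


class FakeService:
    """Toy stand-in for exercising a flow. NOT a real biometric algorithm."""

    def create_voice_template(self, audio: bytes) -> str:
        # Assumption: a template is just some encoded string.
        return audio.hex()

    def match_voice_templates(self, a: str, b: str) -> Tuple[float, float]:
        # Identical templates match fully; anything else does not.
        return (1.0, 1.0) if a == b else (0.0, 0.0)


def handle_call_audio(service: VoiceBiometricService, audio: bytes) -> str:
    """Gateway side: forward call audio to the service and get a template back."""
    return service.create_voice_template(audio)
```

Keeping the boundary this small is what allows everything else (access governance, deployment, storage) to stay on the Kore side.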

Interaction Flow

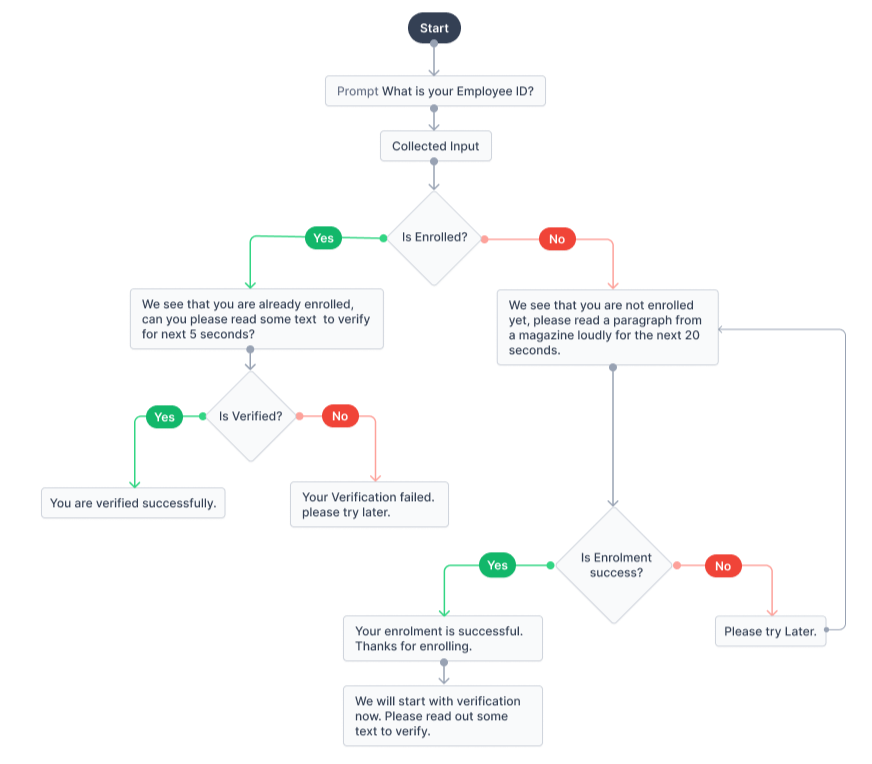

The diagram below illustrates a sample interaction flow for a voice biometrics use case. Below are the supported methods that a bot developer can incorporate within their bot to implement voice biometrics use cases.

Supported Methods

As part of the integration, developers have access to the following methods. These methods can be used with regular dialogs to build a voice biometric flow.

- Is Enrolled (voiceBiometricUtils.isEnrolled):

  - Collect a unique identifier as part of the conversation.

  - Search for the unique identifier in the list of registered users, together with the bot ID and organization ID.

  - If the unique identifier exists in the database, request verification from the user; otherwise, prompt for enrollment.

- Enrollment (voiceBiometricUtils.enrollment):

  - Gather voice input from the user.

  - Assess the voice input quality.

    - The measured quality values are compared against the predefined thresholds in the configuration to perform a voice quality check.

    - If the quality check indicates that more data is required, this is returned as a response code to the function call. Bot developers need to handle this scenario in their conversation design.

  - Construct a voice template using the audio file and the identifier collected in the previous step.

    - This action yields an encoded string (voice_template) linked to that identifier.

    - The voice template is stored along with the bot ID, organization ID, and unique customer ID.

- Verification (voiceBiometricUtils.verification):

  - Verify the existence and enrollment status of a user ID.

  - Repeat the voice-input collection steps from the enrollment process for the live call, and generate a voice template. This voice template is not retained in the database.

  - Send the voice template generated in the previous step, together with the template stored against the user, to ID R&D using the match_voice_templates API.

    - This API returns a match score and a probability, which are evaluated against the values configured in the system.
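The decision logic behind the three methods above can be sketched as follows. This is a minimal illustration in Python, not the platform's implementation: the function names, the Outcome values, the use of speech duration as the quality metric, the threshold values, and the rule that both score and probability must pass are all assumptions made for the example. The template generator and matcher are passed in as plain functions so the sketch stays self-contained.

```python
from enum import Enum
from typing import Callable, Dict, Tuple

Key = Tuple[str, str, str]  # (organization ID, bot ID, unique user ID)


class Outcome(Enum):
    ENROLLED = "enrolled"
    NEED_MORE_DATA = "need_more_data"
    NOT_ENROLLED = "not_enrolled"
    VERIFIED = "verified"
    REJECTED = "rejected"


# Hypothetical thresholds; real values come from the system configuration.
MIN_SPEECH_SECONDS = 3.0
MIN_MATCH_SCORE = 0.8
MIN_MATCH_PROBABILITY = 0.9


def is_enrolled(store: Dict[Key, str], org: str, bot: str, user: str) -> bool:
    """Look up the identifier together with the bot and organization IDs."""
    return (org, bot, user) in store


def enroll(store: Dict[Key, str], org: str, bot: str, user: str,
           audio: bytes, speech_seconds: float,
           make_template: Callable[[bytes], str]) -> Outcome:
    """Quality-check the audio, then build and store a voice template."""
    if speech_seconds < MIN_SPEECH_SECONDS:
        # The caller (the dialog) must handle this and collect more audio.
        return Outcome.NEED_MORE_DATA
    store[(org, bot, user)] = make_template(audio)
    return Outcome.ENROLLED


def verify(store: Dict[Key, str], org: str, bot: str, user: str,
           audio: bytes, speech_seconds: float,
           make_template: Callable[[bytes], str],
           match: Callable[[str, str], Tuple[float, float]]) -> Outcome:
    """Compare a live-call template with the stored one."""
    if not is_enrolled(store, org, bot, user):
        return Outcome.NOT_ENROLLED
    if speech_seconds < MIN_SPEECH_SECONDS:
        return Outcome.NEED_MORE_DATA
    live_template = make_template(audio)  # used once, never stored
    score, probability = match(live_template, store[(org, bot, user)])
    # Assumption: both values must meet their configured thresholds.
    if score >= MIN_MATCH_SCORE and probability >= MIN_MATCH_PROBABILITY:
        return Outcome.VERIFIED
    return Outcome.REJECTED
```

In a dialog, the NEED_MORE_DATA outcome would typically loop back to a prompt that asks the caller to keep speaking, while NOT_ENROLLED would branch to the enrollment flow.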