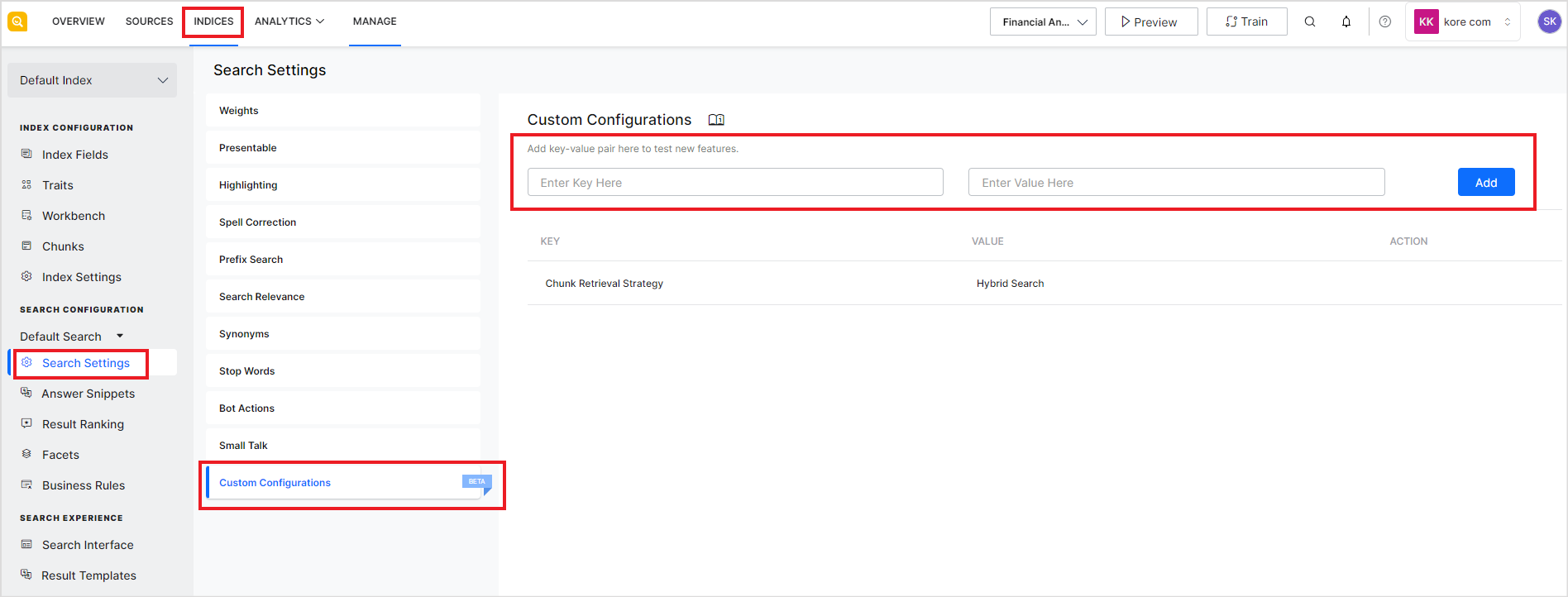

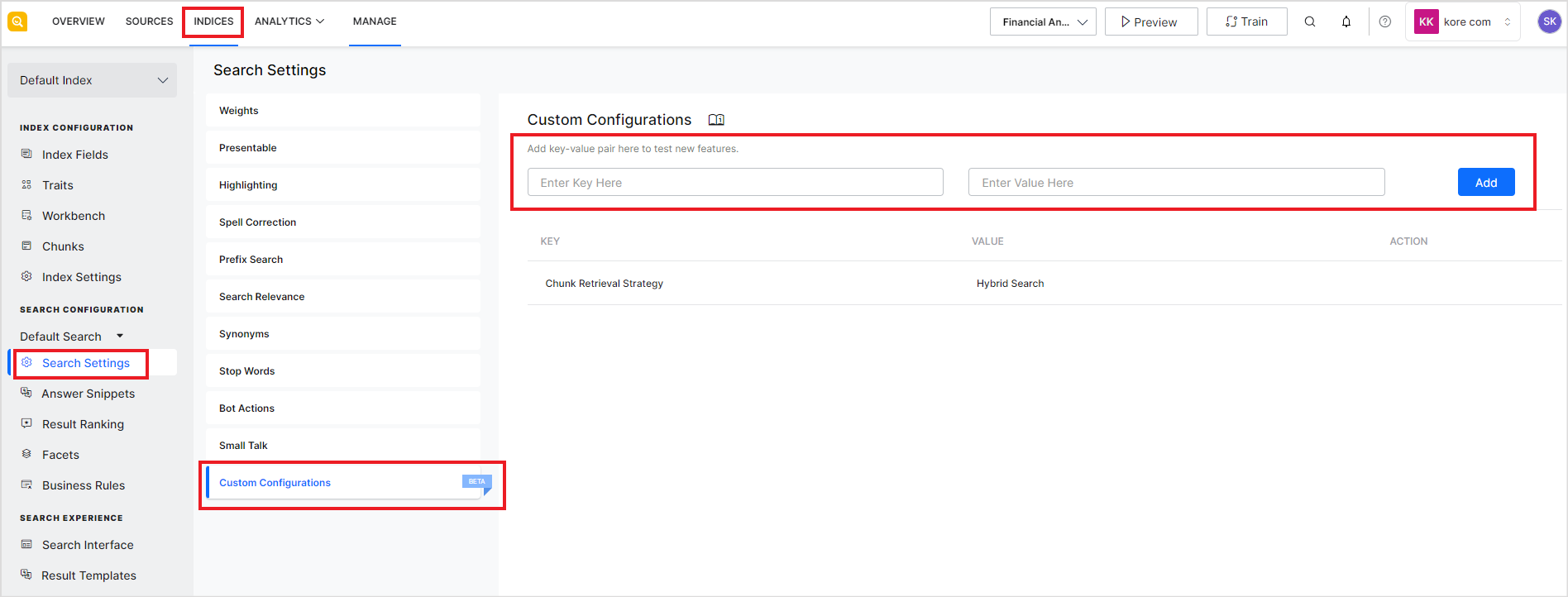

SearchAssist enables customization and optimization of the application’s behavior through advanced configuration parameters. These advanced configurations can be set or updated using the Custom Configurations page under the Search Settings in the Indices tab.

The advanced configurations are available as key-value pairs. The key represents the configuration parameter that needs to be updated. To enable a new configuration, enter the corresponding key and its value.

For example, to introduce a delay between a web page’s loading and the initiation of its crawling, you can use the key ‘dev_enable_crawl_delay’ and set its value to a number between 0 and 9, which indicates the delay interval in seconds.

Note:

- A configuration, wherever applicable, applies to all the content sources, chunks in the application, or answers generated from the application. A configuration cannot be set selectively.

- The key values are case sensitive.

Below is the complete list of configurations available for a SearchAssist application.

Rerank Chunks

| Data Type: ENUM |

Default Value: NA |

Training Required: No |

For answer generation, relevant chunks are initially retrieved using the similarity score between the user query and the chunks. The re-ranking of chunks further boosts the relevance of the top chunks to the specific query. Use this key to enable re-ranking and to select the model for reranking. It can take one of the following values:

- minilmv2: A fast, multilingual model trained in 16 languages. It excels in efficient processing of text across multiple languages, making it ideal for applications requiring quick multilingual capabilities.

- electrabase: Provides highly accurate reranking but is slower compared to minilmv2. It prioritizes accuracy over speed in reranking tasks.

|

Rerank Chunk Fields

| Data Type: String |

Training Required: No |

| Default Value: chunkTitle, chunkText, recordTitle |

| This key configures the fields used for generating the chunk vectors while reranking. You can use any of the chunk index fields as the value of this field. This field is used only if the reranking of chunks is enabled. |

Maximum re-rank chunks

| Data Type: |

Default Value: 20 |

Training Required: No |

This key configures the maximum number of chunks to be sent for reranking. This field can take any integer value between 5 and 20. This field is used only if the reranking of chunks is enabled.

Note that increasing the number of fields for reranking typically leads to higher latency due to the added complexity in computation, data retrieval, and the processing load. |

Enable Page Body Cleaning

| Data Type: Boolean |

Default Value: True |

Training Required: Yes |

This key is used to determine whether to clean the page body while crawling a web page. Cleaning a web page aims to remove redundant and less significant sections of the pages while indexing. When this field is set to false, the content in the following HTML tags are removed while indexing:

- script, style, header and footer

When this field is set to true, the following HTML tags are removed while indexing.

- annoying_tags” “comments”, “embedded”, “forms”, “frames”,”javascript”, “links”, “meta”, “page_structure”, “processing_instructions”, “remove_unknown_tags”, “safe_attrs_only” “scripts”, “style”.

|

Crawl Delay

| Data Type: ENUM |

Default Value: 0 |

Training Required: Yes |

| Rendering of pages can take time, depending on the amount of content rendered through JavaScript. This key introduces delay between the loading of a page and its crawling allowing the source page to completely load before the crawling begins. This field can take any integer value between 0 and 9. By default, this field is set to 0, indicating no delay. You can set a maximum delay of 9 seconds. |

Chunk Token Size

| Data Type: Number |

Default Value: 400 |

Training Required: Yes |

| This key facilitates the creation of chunks with the specified number of tokens when using text-based extraction strategy. This field can take integer values. |

Chunk Vector Fields

| Data Type: Enum |

Default Value:chunkTitle, chunkText, recordTitle |

Training Required: Yes |

| This key is used to identify the fields used for vector generation for a chunk. Go to the JSON view of a chunk to view the various fields available for a chunk. |

Rewrite Query

| Data Type: Enum |

Default Value:query |

Training Required: No |

Query Rewrite is the process of modifying a search query to enhance the accuracy or relevance of the search results. It involves using contextual information to enable query rewriting and selecting the appropriate context information for the rewrite.”SearchAssist uses OpenAI for query rewriting. This key can take the following values:

- query – sends the last five user queries to LLM as context.

- conversation – sends the last five user queries and corresponding answers to LLM as context.

Example:

- Query-1: what is Paytm?

- Query-2: How to invest in mutual funds?

- Rephrased query: How do you invest in mutual funds through Paytm?

- Query-3: Create an account

- Rephrased query: How do you create an account in Paytm?

|

Hypothetical Embeddings

| Data Type: Boolean |

Default Value: false |

Training Required: No |

| This key enables hypothetical embeddings for user queries. The query is sent to an LLM without chunks to generate a hypothetical answer. Then the query and the corresponding hypothetical answer are used to create embeddings for vector search. To enable this feature, set the key value to true. |

Chunk Deviation Percent

| Data Type: Number |

Default Value:90 |

Training Required: No |

| This refers to the proximity threshold, which determines how close two chunks need to be in terms of embeddings for them to be considered similar or related. When the difference between scores of chunks goes beyond this deviation percentage, the chunk is not sent to LLM for answer generation. You can set this field to any float value between 1 and 100. |

Number of Chunks

| Data Type: Number |

Default Value:5 |

Training Required: No |

| This key sets the number of chunks sent to the LLM for answer generation. It can take any value between 1 and 20, offering more flexibility than the SearchAssist interface, which caps this value to a maximum of 5 chunks. |

Maximum Token Size

| Data Type: Number |

Default Value:3000 |

Training Required: No |

This key sets the maximum number of tokens to be sent to OpenAI while generating an answer. This key can take any integer value. This is the token limit combining the chunks and the prompt sent to the LLM.

The number of tokens sent to the LLM also depends on the chunk size and the number of chunks. Therefore, increasing the Maximum Token Size alone does not increase the total number of chunks sent to the LLM.When modifying the Maximum Token Size, ensure that the values for chunk size and number of chunks are adjusted accordingly to maintain alignment and optimize performance. Misalignment between these parameters can lead to inefficient processing or truncated outputs.

Also, consider the token limits of the LLM model used and the Response Size configured for the application while setting the value for this key. |

Response Size

| Data Type: Number |

Default Value:512 tokens |

Training Required: No |

| This key defines the maximum number of response tokens generated by AI models. It can take any integer number as a value. When setting this value, consider the token limits of the LLM used. |

top_p value

| Data Type: Number |

Default Value: 1 |

Training Required: No |

| By adjusting top_p, developers can fine-tune the model’s output, making it more creative or deterministic based on the application’s needs. Higher values of top_p indicate more randomness and lower values indicate higher coherence. Use this key to set the value of the top_p parameter passed to OpenAI. It can take any float value between 0 and 1 as its value. |

Chunk Order

| Data Type: Enum |

Default Value: Normal |

Training Required: No |

This key can be used to reverse the order of qualified chunks sent to the LLM in the Prompt. The key can take the following values:

- Normal: In this case, the chunks are added in descending order of relevance, i.e. highest relevance to the lowest, followed by the query. For instance, if the top five chunks are to be sent to the LLM, the most relevant chunk is added first and the least relevant chunk is added at the end.

- Reverse: In this case, the chunks are added in ascending order of relevance. The least relevant chunk is added first, and the most relevant chunk is at the end, followed by the query.

The order of data chunks can affect the context, and thereby the results of a user query. The decision to use normal or reverse chunk order should align with the specific goals of the task and the nature of the data being processed. |

Answer Response Length

| Data Type: Number |

Default Value: 150 tokens |

Training Required: No |

| This key allows you to configure the maximum length of the Extractive answers. It can take any integer value. |

Chunk Types

| Data Type: Enum |

Default Value: text, layout |

Training Required: No |

| This field is used to specify the chunk types to be used for generative answers. By default, all the existing chunks are used for answer generation. However, if required, you can choose to use only a specific type of chunk for answer generation. |

Snippet Selection

| Data Type: Boolean |

Default Value: False |

Training Required: No |

| This key enables LLM to choose the best snippet from the selected set of extractive snippets. This is useful for users willing to use LLM(OpenAI) but requiring deterministic answers. When this field is set to true, the top five snippets are sent to LLM to choose the best snippet which is displayed to the user as the answer. |

Chunk Retrieval Strategy

| Data Type: Enum |

Default Value: hybrid_search_legacy |

Training Required: No |

This key is used to select the retrieval strategy for search results and answers. This key can take the following values:

- vector_search

hybrid_search

- doc_search_hybrid

- doc_search_hybrid_legacy

- doc_search_pure_vector

Find more details on different strategies and recommendations here. |

Response Timeout

| Data Type: Integer |

Default Value: 15 seconds |

Training Required: No |

| This key sets the timeout for a response from OpenAI. It can take any value greater than 0. |

Custom HTML Parser

| Data Type: Text |

Default Value: NA |

Training Required: Yes |

This key is used to selectively remove unnecessary content from the HTML page, identified using XPATHs or CSS selectors, during web crawling. The key’s values should be provided as a JSON object. For instance, to exclude the content by a given XPath in all the URLs, set the following value for the key.

[

{

"for":

{

"urls":

[

".*"

]

},

"exclude":

[

{

"type": "XPATH",

"path": "//*[@id=\"newSubNav\"]"

}

]

}

]

For more information on how to use this config, refer to this. |

Prompt Fields

| Data Type: Text |

Default Value: NA |

Training Required: Yes |

| This key is used to selectively send chunk fields to the LLM via the Prompt. By default, SearchAssist sends chunkText and ChunkTitle in the prompt, but using this config, you can send any chunk fields. The values for the key should be provided as a JSON object, where keys are the chunk field names and the values are the corresponding names in the prompt. For instance, the following JSON object can be used to add chunk sourceName and the date of publication to the prompt.

{

“sourceName”: “source_name”,

“chunkMeta.datePublished”: “datePublished”

}

Note: If multiple keys map to the same prompt field, priority is given to the first non-empty value.

For example, from the following JSON, “content” will be the values stored in table_html unless it is empty, in which case it will be the values in the “chunkText” field.

{

“table_html”: “content”,

“chunkText”: “content”,

“recordTitle”: “source_name”,

“sourceName”: “source_name”,

“chunkMeta.datePublished”: “datePublished”

} |

Single Use URL

| Data Type: Boolean |

Default Value: True |

Training Required: No |

| A URL is shared as a citation in the response. For answers generated from files, a link to the file is provided, which can be used to download the file. This key can be used to enable or disable the URL as a single-use URL. When the key is set to True, the URL in the response is a single-use URL, and when this key is set to False, the URL can be used multiple times. |