SearchAssist also provides a simulator for testing the snippets engine and finding the most suitable configuration or model for your business needs. It presents the answer snippet flow analysis both as text and in JSON format. You can enable one or more models, use the simulator to assess the results each model returns for user queries, and choose the most appropriate model.

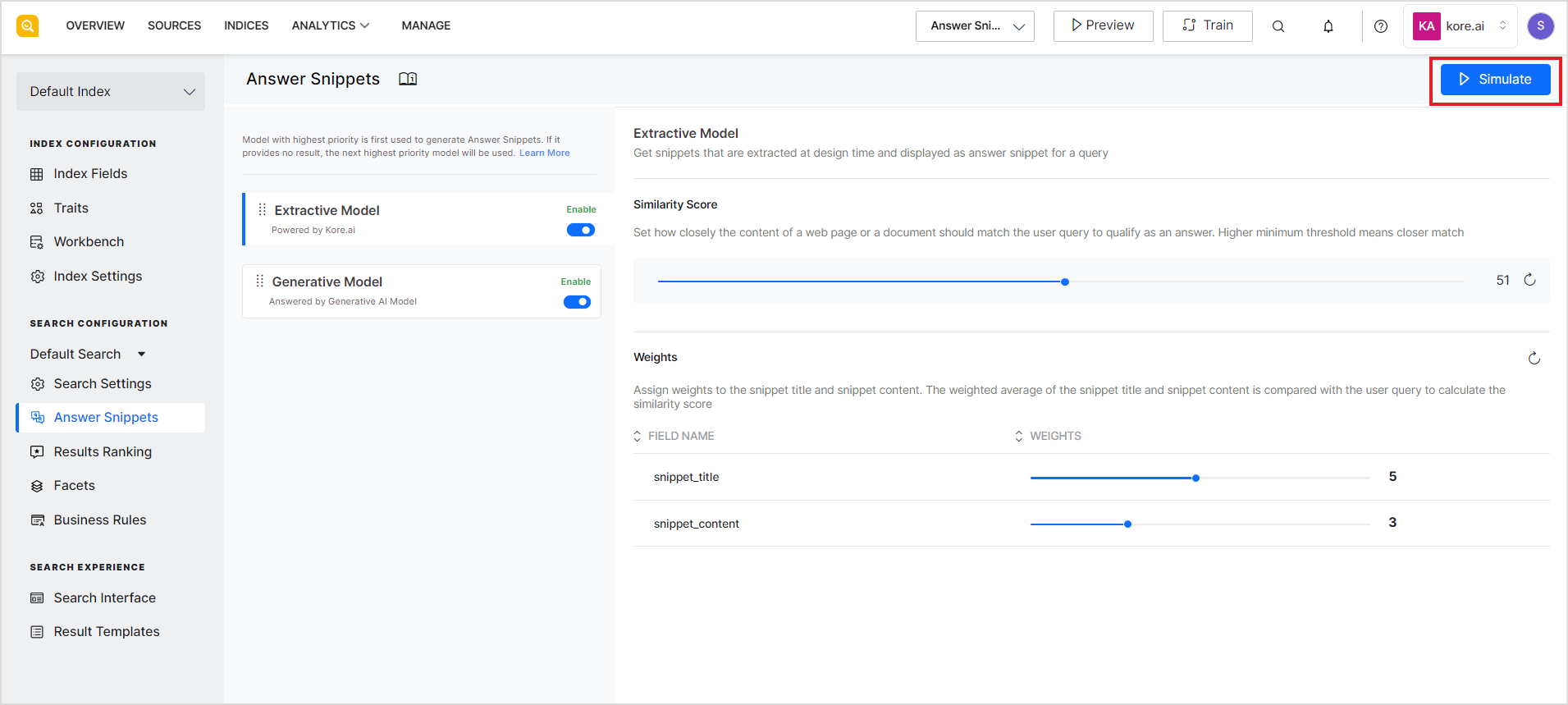

To start the simulator, click the Simulate button in the top-right corner of the page.

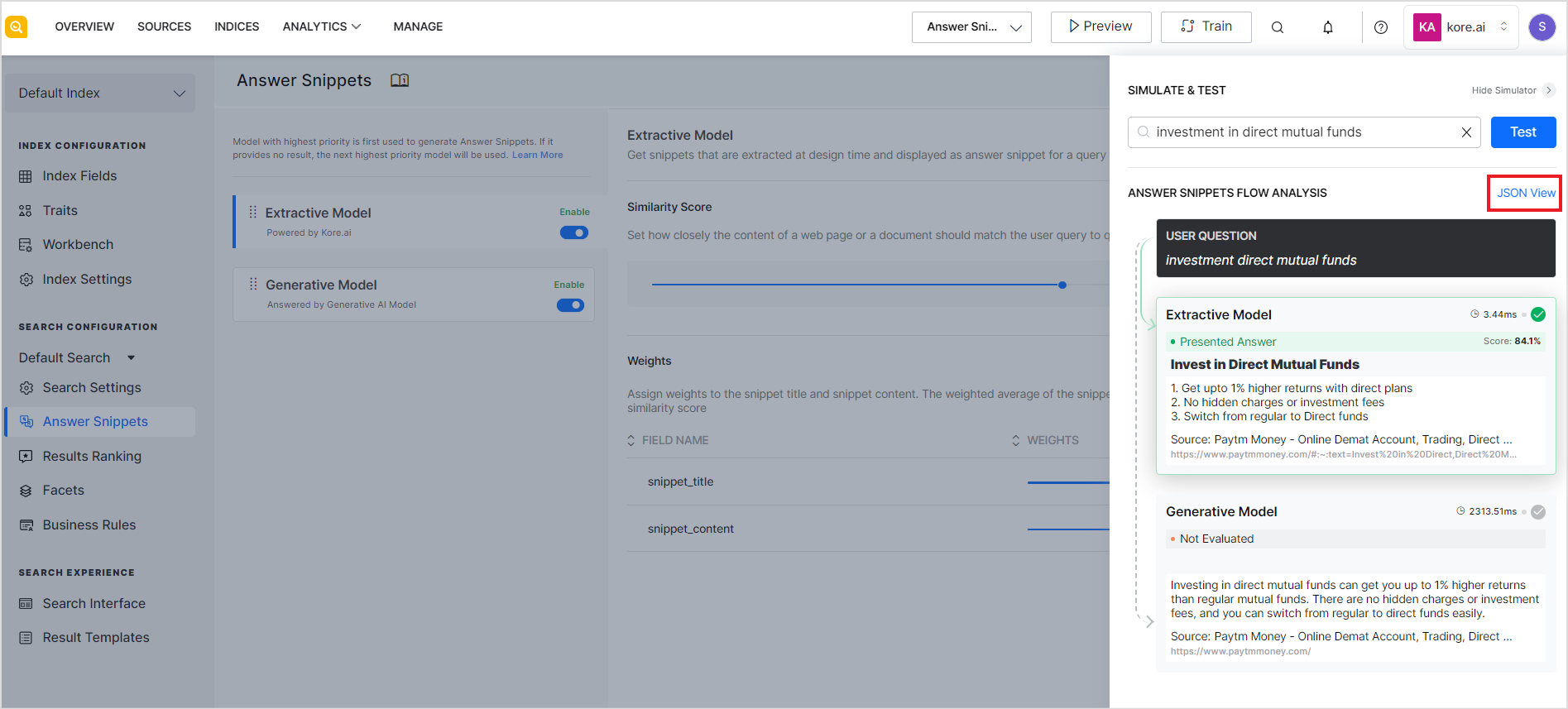

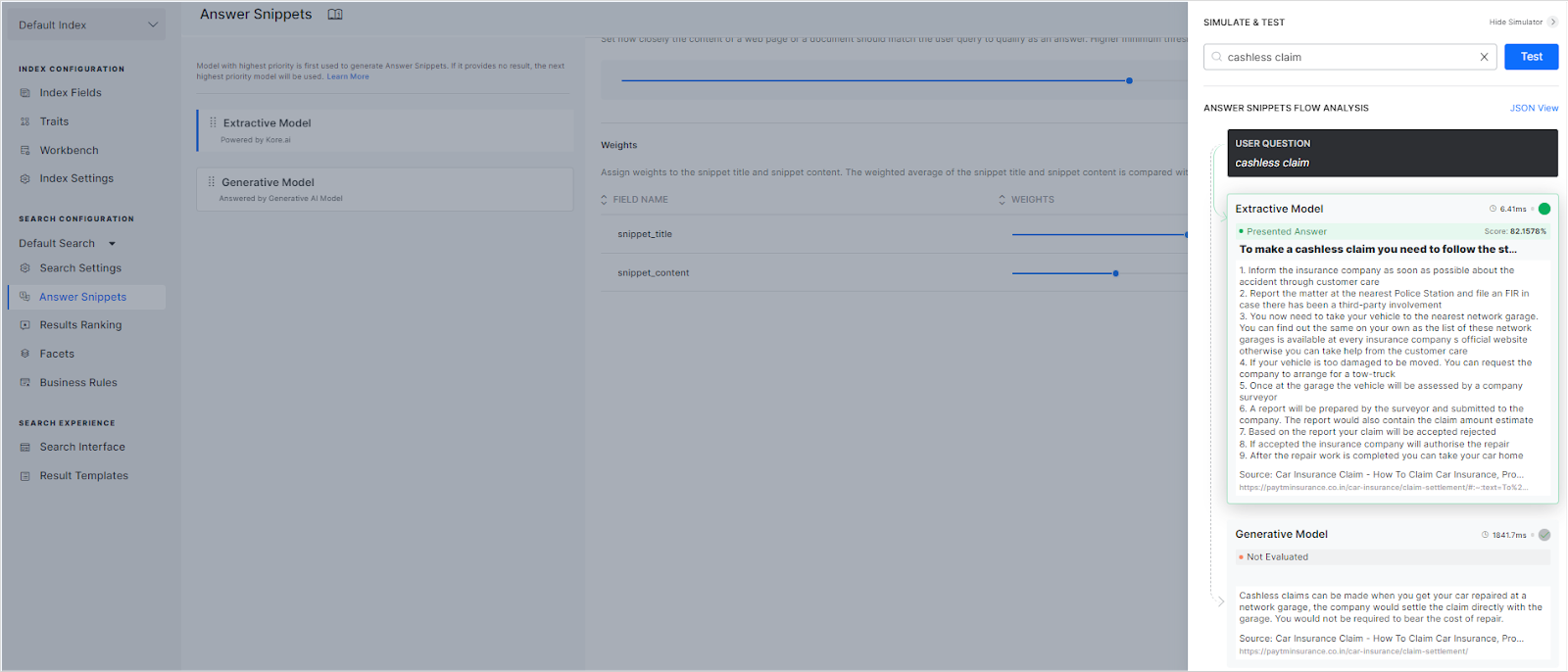

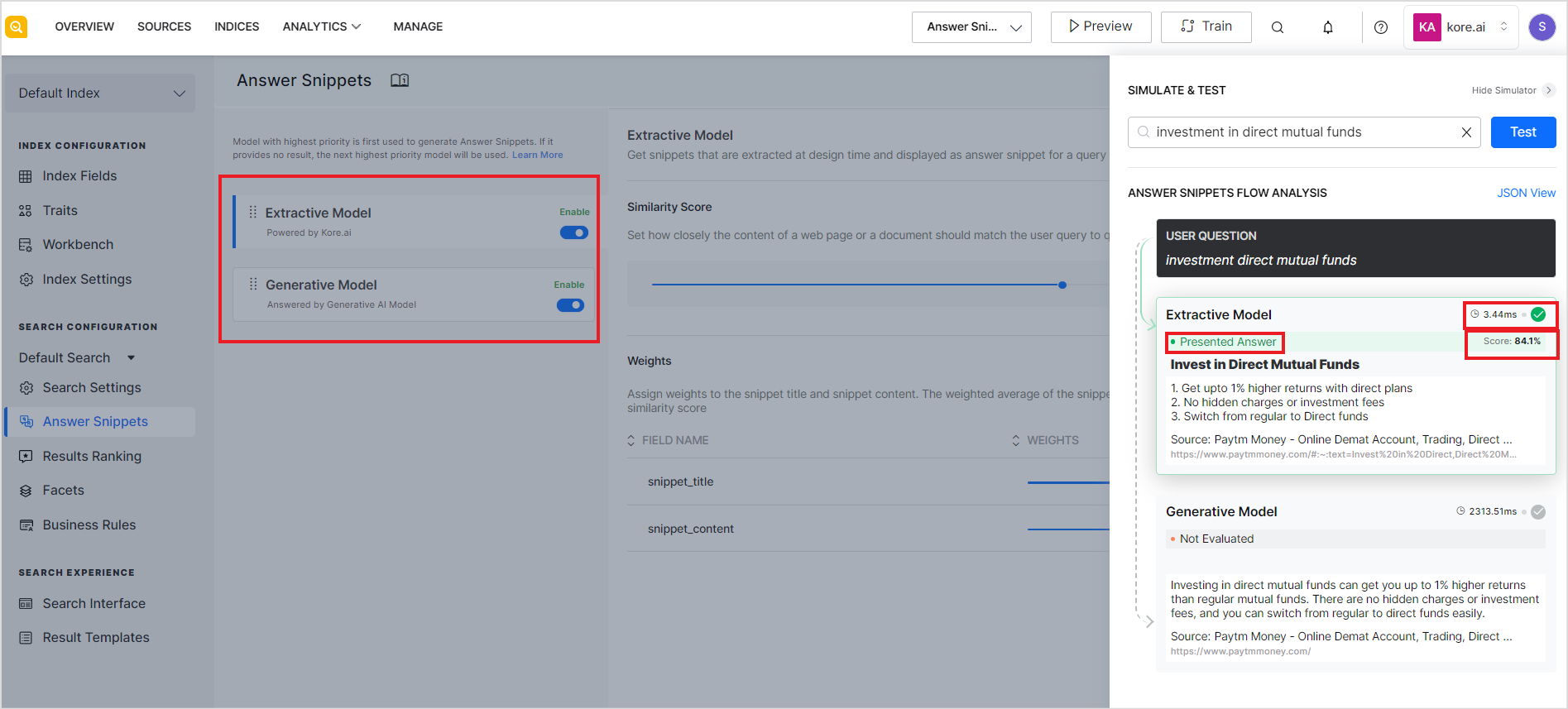

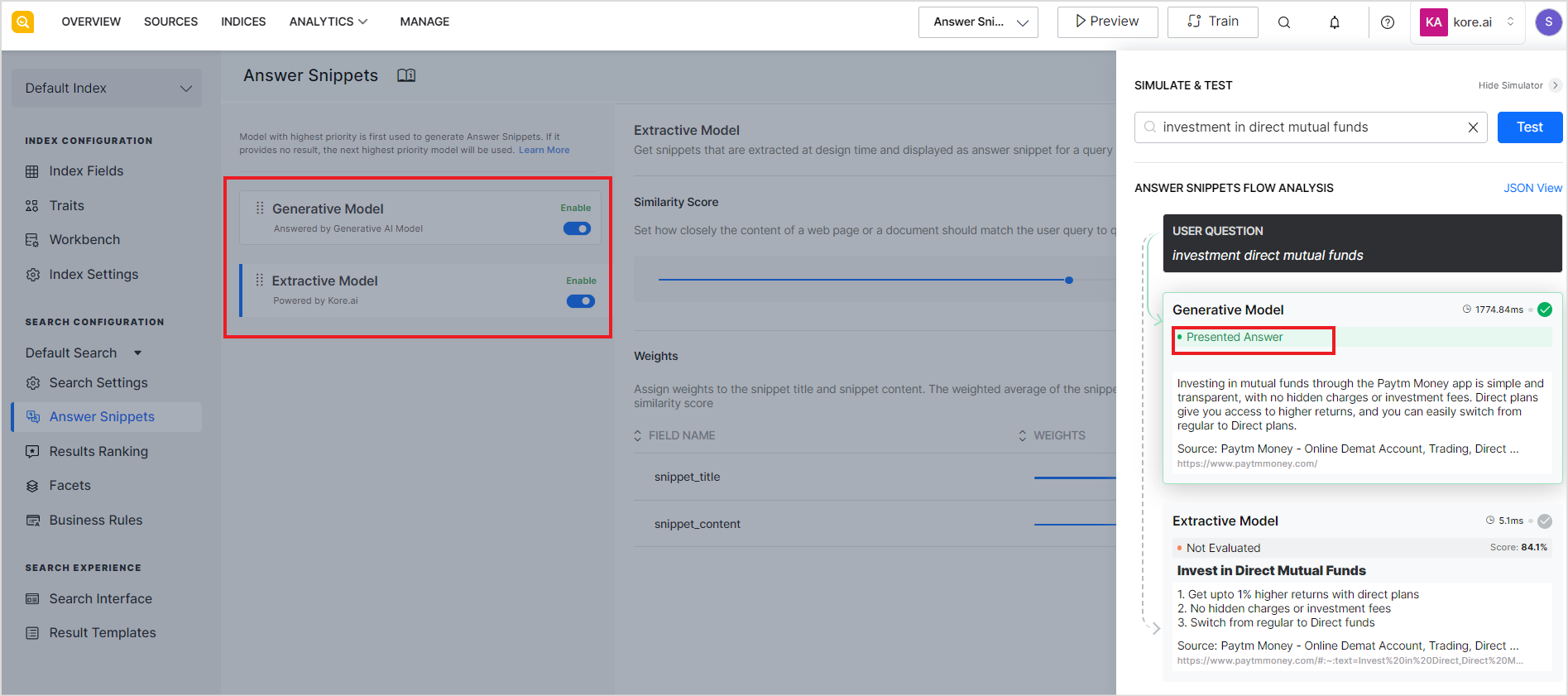

Enter a sample query and click Test. Depending on the models enabled for answer snippets and the priority set for each, the simulator displays the results from the models, as shown in the samples below.

The model marked as the Presented answer is the one set to the higher priority, so its snippet is what the user sees on the search results page. The simulator also shows the time each model takes to find snippets and, for the extractive model, the overall similarity score of the snippet.
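The priority rule described above can be sketched in a few lines of Python. This is only an illustration, not SearchAssist's implementation: the `ModelResult` class, its field names, the "lower number means higher priority" convention, and the fallback to the next model when a higher-priority model returns no snippet are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ModelResult:
    """Hypothetical shape of one model's simulator result (not the product schema)."""
    model: str                          # e.g. "extractive" or "generative"
    priority: int                       # assumed convention: 1 = highest priority
    snippet: Optional[str]              # None when the model found no snippet
    time_ms: float                      # time the model took to find the snippet
    similarity: Optional[float] = None  # only reported for the extractive model


def presented_answer(results: List[ModelResult]) -> Optional[ModelResult]:
    """Return the highest-priority result that has a snippet, or None.

    Skipping models without a snippet is an assumption of this sketch.
    """
    candidates = [r for r in results if r.snippet]
    return min(candidates, key=lambda r: r.priority, default=None)


results = [
    ModelResult("generative", priority=2, snippet="Generated answer", time_ms=850.0),
    ModelResult("extractive", priority=1, snippet="Extracted answer",
                time_ms=120.0, similarity=0.82),
]
chosen = presented_answer(results)
print(chosen.model if chosen else "no snippet")
```

With the sample values above, the extractive result is selected because it carries the higher priority. Swapping the two `priority` values would make the generative result the presented one.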

Response when the extractive model is set to a higher priority.

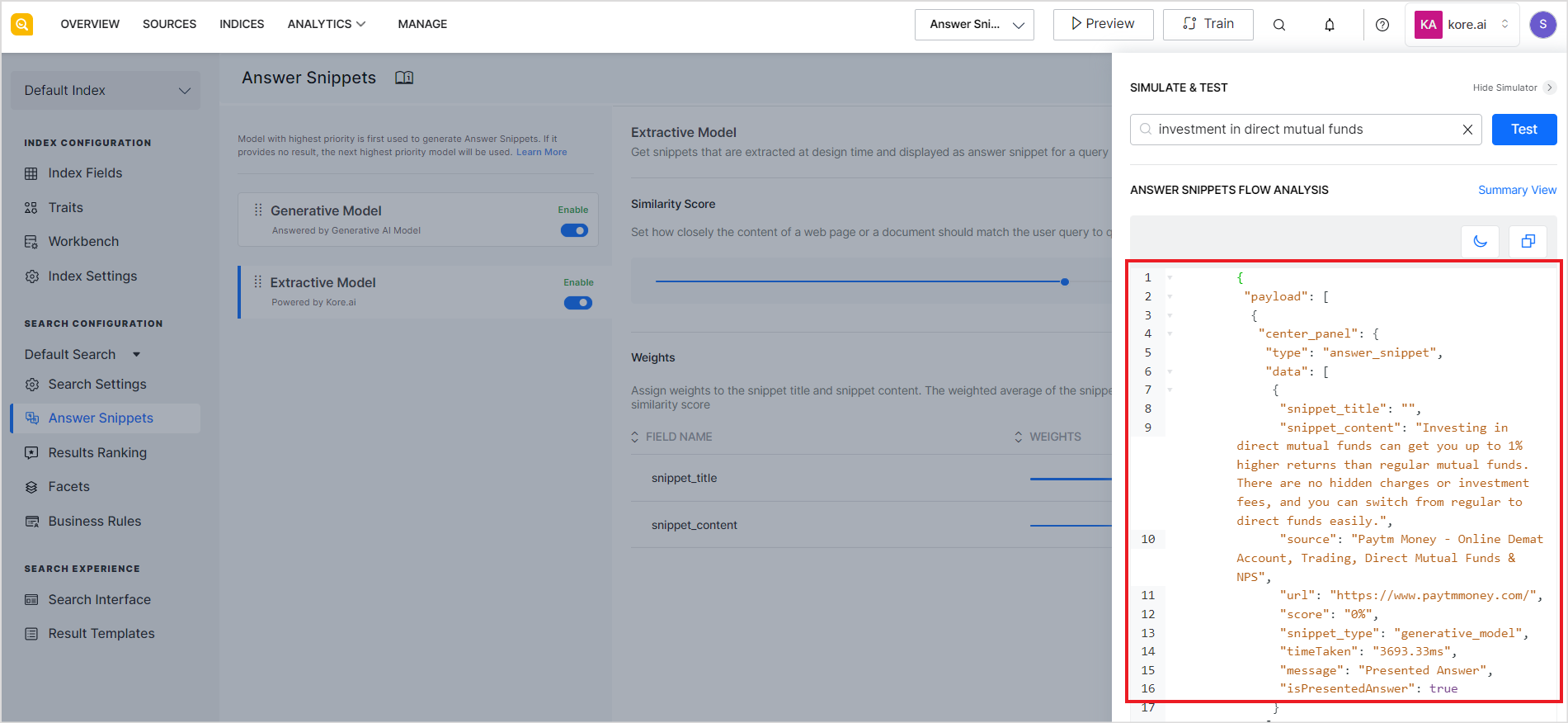

Response when the generative model is set to a higher priority.

The answer snippet flow analysis, that is, the simulation result, is also available in a developer-friendly JSON format. This format provides additional information about the snippets and will be further enhanced in the future. Click JSON view to see the results in JSON format.