Troubleshooting Answer Snippets

This guide covers frequently seen issues and general guidelines for troubleshooting the Answers feature in a SearchAssist application when you receive no answer, or when the answer you receive does not match the expected one.

Note that values for specific configurations discussed below may vary depending on the application’s requirements, the format of the ingested data, and other unique application characteristics. Understanding the application’s architecture and requirements is essential to ensure precise answers.

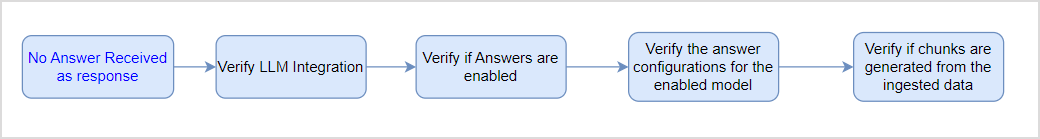

CASE 1: No Answers are received as a response

When you do not see any answers in the response, follow the steps listed below to troubleshoot the setup.

- Verify LLM Integration

  - Check for any connectivity issues or credential errors, ensuring that the necessary connections are properly established.

- Verify the enabled Answer Models

  - Make sure that Answer Snippets are enabled in the application settings.

  - Ensure that the answer snippet configurations for the enabled models are set correctly.

- Verify Chunks

  - Use the Chunk Browser to check whether chunks are generated from the relevant ingested data. If chunks are not generated, train the application. When data is synced from connectors or crawled from the web at scheduled intervals, the application may hold data it has not yet been trained on. In such cases, training the application creates chunks from the latest data as well.

- Verify Prompt

  - Ensure that the prompt is correctly set and directs the LLM to return the expected response.

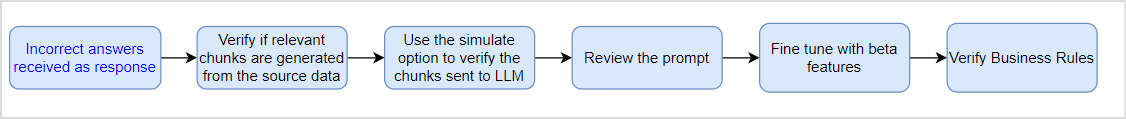

CASE 2: Incorrect Answer received as a response

When you receive answers in response to your queries but the answers are not as expected, follow the steps listed below to troubleshoot the issue.

- Verify Chunks

  - Go to the Chunk Browser and examine the content extracted from the ingested data. Verify that the content is extracted accurately and that it includes the information you are searching for. If the chunks from a specific data source are missing, train the application again.

  - Next, use the Debug tool to investigate the chunks being sent to the LLM. If no chunks qualify, consider adjusting the similarity threshold to include more relevant content, then repeat the test query.

- Adjust Answer Model properties

  - Verify that the properties of the enabled answer model, such as the similarity score and weights, are set correctly. Understand the configuration parameters and experiment with their values to get the desired response.

  - Review the prompt for possible errors or instructions that could be misinterpreted, and try editing it. Write clear and concise instructions in the prompt, and adhere to the rules for defining a prompt.

- Fine Tune Properties

  - Use Beta features to experiment with chunk size and chunk overlap.

  - Customize the response length using beta features to limit the number of tokens in the answer.

  - Optimize embeddings by selecting vector fields and enabling vector search.

- Verify Business Rules

  - Ensure that the business rules are modifying the results as expected. Business rules can modify outcomes by filtering, boosting, or hiding specific documents or chunks.
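To make the similarity threshold step under Verify Chunks more concrete, here is a minimal Python sketch of how a threshold decides which chunks qualify for the LLM. It is an illustration only: the use of cosine similarity, the function names, and the toy vectors are assumptions made for this example and do not describe how SearchAssist scores chunks internally.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (assumed metric)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def qualifying_chunks(query_vec, chunks, threshold):
    """Return (chunk_id, score) pairs scoring at or above the threshold,
    best match first. `chunks` is a list of (chunk_id, vector) pairs."""
    scored = [(cid, cosine_similarity(query_vec, vec)) for cid, vec in chunks]
    kept = [(cid, score) for cid, score in scored if score >= threshold]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)

# Hypothetical toy data: a 2-dimensional query and three chunk embeddings.
query = [1.0, 0.0]
chunks = [
    ("refund-policy", [0.9, 0.1]),
    ("shipping", [0.5, 0.5]),
    ("careers", [0.0, 1.0]),
]

strict = qualifying_chunks(query, chunks, threshold=0.995)   # nothing qualifies
relaxed = qualifying_chunks(query, chunks, threshold=0.7)    # two chunks qualify
print([cid for cid, _ in strict], [cid for cid, _ in relaxed])
```

In this sketch, a threshold that is too strict leaves the LLM with no chunks to answer from, while a lower value admits more chunks at the cost of including weaker matches. That trade-off is why it helps to repeat the test query after each adjustment.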
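The chunk size and chunk overlap settings mentioned under Fine Tune Properties can be pictured with a small Python sketch. It assumes chunks are measured in whitespace-separated words, which is a simplification for illustration; the function name `chunk_words` is hypothetical, and the actual chunking strategy and units used by SearchAssist may differ.

```python
def chunk_words(text, chunk_size, overlap):
    """Split text into chunks of up to `chunk_size` words, where each chunk
    repeats the last `overlap` words of the previous chunk."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks

sample = "a b c d e f g h i j"
print(chunk_words(sample, chunk_size=4, overlap=1))
print(chunk_words(sample, chunk_size=4, overlap=0))
```

With overlap, the word at a chunk boundary appears in both neighboring chunks, so a sentence that straddles the boundary is less likely to be cut off from its context. Larger chunks carry more context per chunk but make each match less specific, which is why these values are worth experimenting with.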