SearchAssist lets you use generative AI to enrich and enhance your data. The Custom LLM Prompt stage lets you configure prompts that direct the LLM to produce the output you want. Well-structured prompts tend to give more precise results that fit the task at hand.

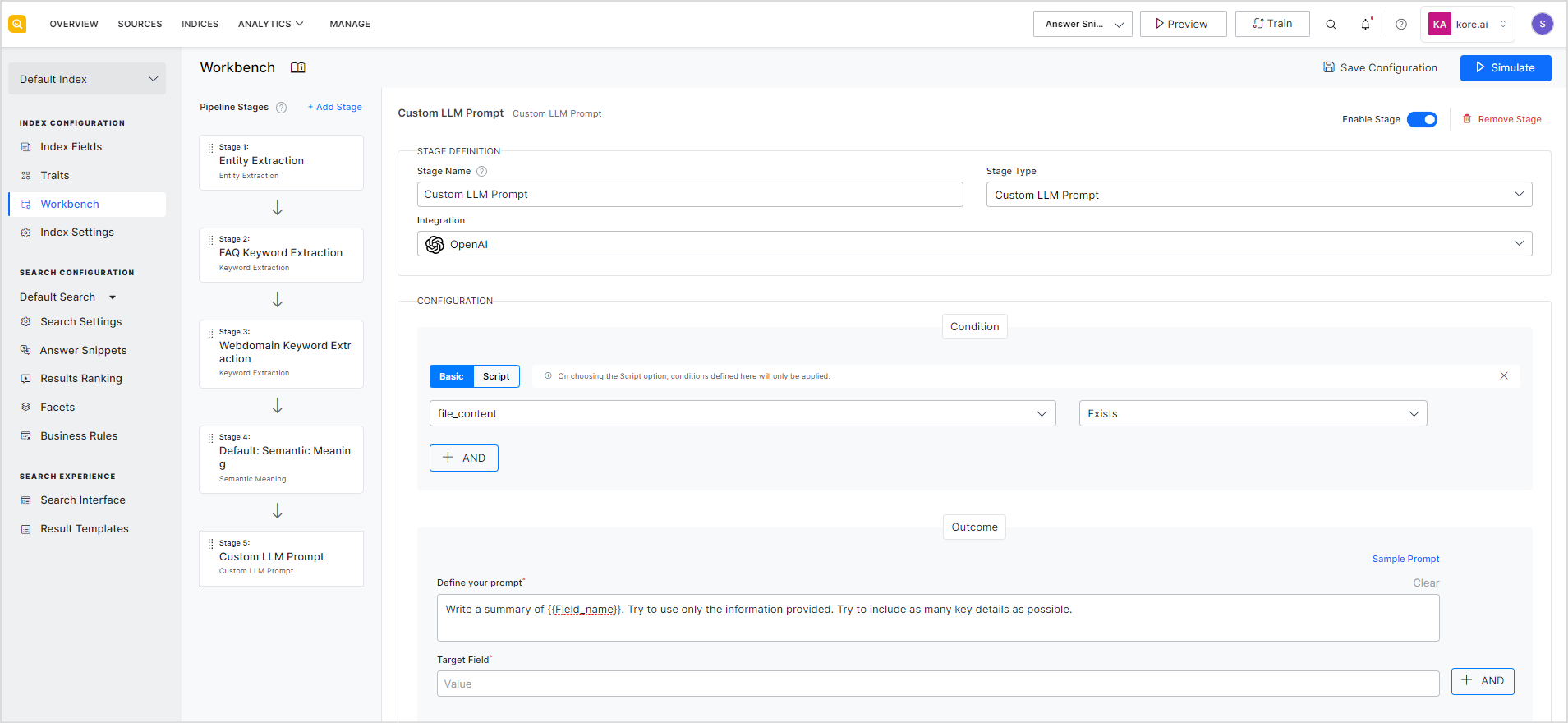

Use the following fields to configure the Custom LLM Prompt stage.

Stage Name: Give an appropriate name to the stage for easy identification.

Stage Type: Set the stage type to Custom LLM Prompt.

Integration: This drop-down lists all the LLM integrations configured for the application. Select the integration to use for the prompts in this stage. If no LLM is integrated with the application, go to the Integrations page and configure an LLM engine before you define this stage.

Configuration:

- Condition: First, define the condition that selects the data this stage processes. For example, to create a summary field only for file-type content, set the field to sys_content_type, the operator to equal to, and the value to file. With this condition, only files are processed in this stage. You can define one or more conditions to select specific data.

- Outcome: Define the prompt to use and the target field that stores the output. Continuing the example above, to create a summary field, define a prompt such as “Write a summary of {{file_content}}. Try to use only the information provided.” and set the target field to ‘file_summary’. If the target field does not already exist, the application adds it as a new field.

You can also choose a prompt from the sample prompts provided by SearchAssist. The sample prompts are designed for frequently used generative AI tasks.
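The condition and outcome settings can be modeled roughly as follows. This is a minimal, hypothetical sketch of the stage's behavior, not SearchAssist code. It assumes that documents are plain dictionaries, that multiple conditions are combined with AND, and that `{{field}}` placeholders are filled from the document's own fields. The names `matches`, `render_prompt`, `apply_stage`, and `echo_llm` are illustrative only, and the LLM call is a stand-in function rather than a real integration.

```python
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical condition format: (field, operator, value).
Condition = Tuple[str, str, str]

# Only two operators are modeled here; the operators SearchAssist offers may differ.
OPERATORS: Dict[str, Callable[[object, object], bool]] = {
    "equal to": lambda actual, expected: actual == expected,
    "not equal to": lambda actual, expected: actual != expected,
}

# Matches placeholders such as {{file_content}} or {{ file_content }}.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def matches(doc: dict, conditions: List[Condition]) -> bool:
    """Assumption: a document is processed only if every condition holds (AND)."""
    return all(OPERATORS[op](doc.get(field), value) for field, op, value in conditions)


def render_prompt(template: str, doc: dict) -> str:
    """Replace each {{field}} with the document's value; missing fields become ""."""
    return PLACEHOLDER.sub(lambda m: str(doc.get(m.group(1), "")), template)


def apply_stage(docs: List[dict], conditions: List[Condition], template: str,
                target_field: str, llm: Callable[[str], str]) -> List[dict]:
    """Send the rendered prompt for each matching doc to llm and store the reply."""
    for doc in docs:
        if matches(doc, conditions):
            # Creates target_field on the document if it does not exist yet.
            doc[target_field] = llm(render_prompt(template, doc))
    return docs


docs = [
    {"sys_content_type": "file", "file_content": "Q3 revenue grew 12 percent"},
    {"sys_content_type": "web", "page_body": "Welcome page"},
]
conditions = [("sys_content_type", "equal to", "file")]
template = "Write a summary of {{file_content}}. Try to use only the information provided."

# Stand-in for the integrated LLM: it only echoes the prompt it receives.
echo_llm = lambda prompt: f"[LLM output for: {prompt}]"

apply_stage(docs, conditions, template, "file_summary", echo_llm)
print(docs[0]["file_summary"])
print("file_summary" in docs[1])  # False: the web page did not match the condition
```

In this sketch, only the first document gains a `file_summary` field, which mirrors the example above where only file-type content is summarized.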

After making your changes, click Save Configuration. To test and verify the stage's behavior, use the Simulate option. It applies the configuration to the test files in the simulator and shows the results. The simulator also reports any errors in the configuration.
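The page does not say which errors the simulator reports. The sketch below illustrates one plausible kind of dry-run check: a prompt placeholder that no test document provides, an unsupported operator, or an empty target field. These checks, the operator list, and the function name `check_config` are all hypothetical assumptions, not SearchAssist behavior.

```python
import re
from typing import List, Tuple

# Matches placeholders such as {{file_content}}.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")
# Hypothetical operator list; the real stage may support different operators.
SUPPORTED_OPERATORS = {"equal to", "not equal to"}


def check_config(conditions: List[Tuple[str, str, str]], template: str,
                 target_field: str, test_docs: List[dict]) -> List[str]:
    """Return human-readable problems found in a (hypothetical) stage config."""
    errors = []
    for field, op, _value in conditions:
        if op not in SUPPORTED_OPERATORS:
            errors.append(f"unsupported operator '{op}' on field '{field}'")
    if not target_field:
        errors.append("target field is empty")
    # Flag prompt placeholders that no test document can fill.
    for name in PLACEHOLDER.findall(template):
        if not any(name in doc for doc in test_docs):
            errors.append(f"prompt field '{name}' not found in any test document")
    return errors


test_docs = [{"sys_content_type": "file", "file_content": "Quarterly report text"}]
# The prompt misspells file_content as file_contents, so the check flags it.
print(check_config([("sys_content_type", "equal to", "file")],
                   "Write a summary of {{file_contents}}.", "file_summary", test_docs))
# ["prompt field 'file_contents' not found in any test document"]
```

A check like this catches a misspelled field name before any LLM calls are made, which is the same reason to run Simulate before applying the stage to all your content.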