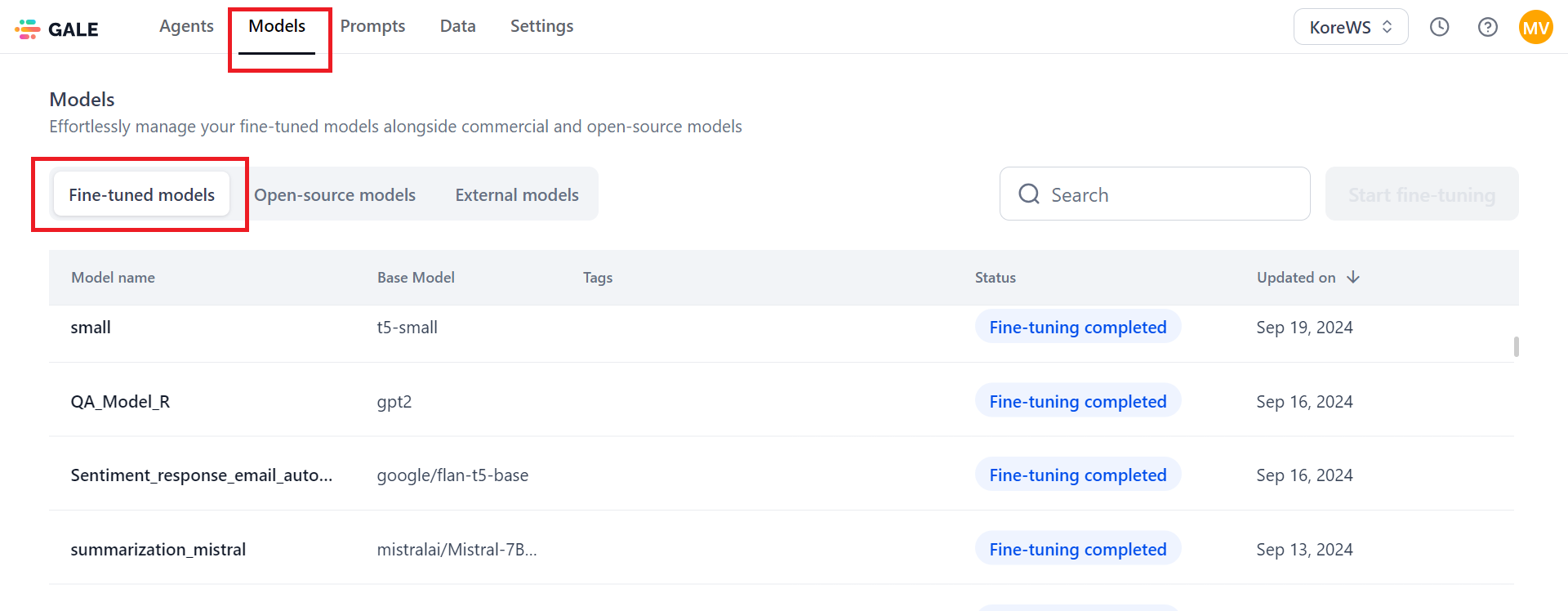

Deploy a fine-tuned model

After the fine-tuning process is complete, you can deploy your fine-tuned model.

To deploy your fine-tuned model, follow these steps:

-

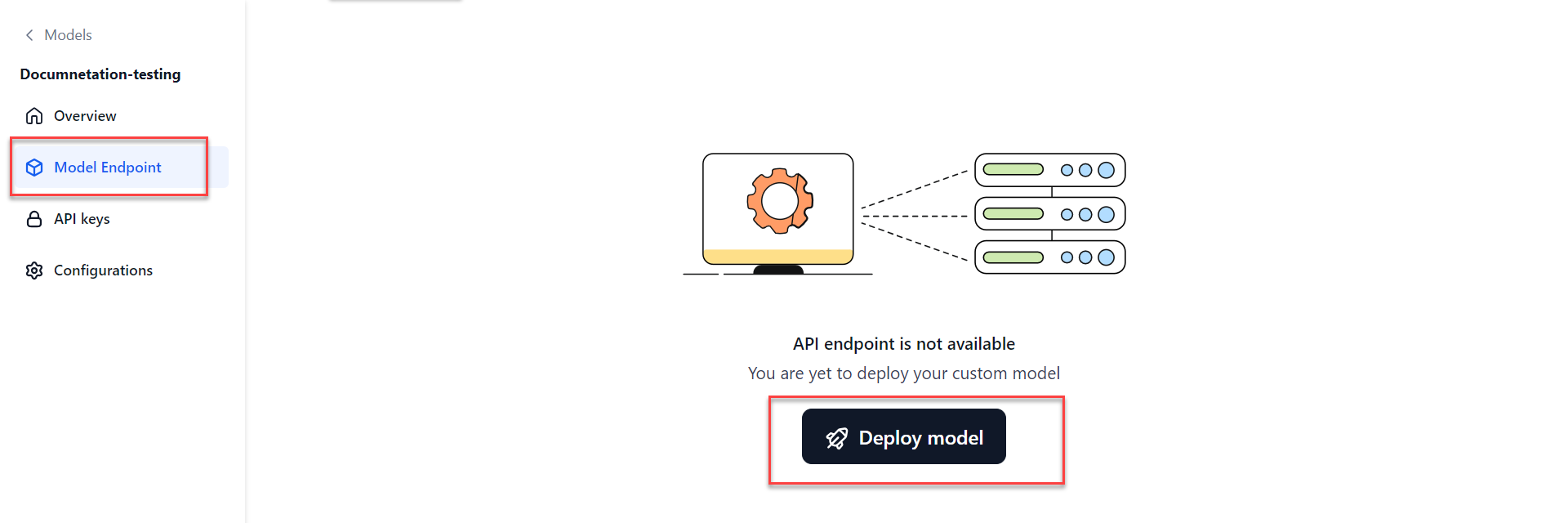

Do one of the following:

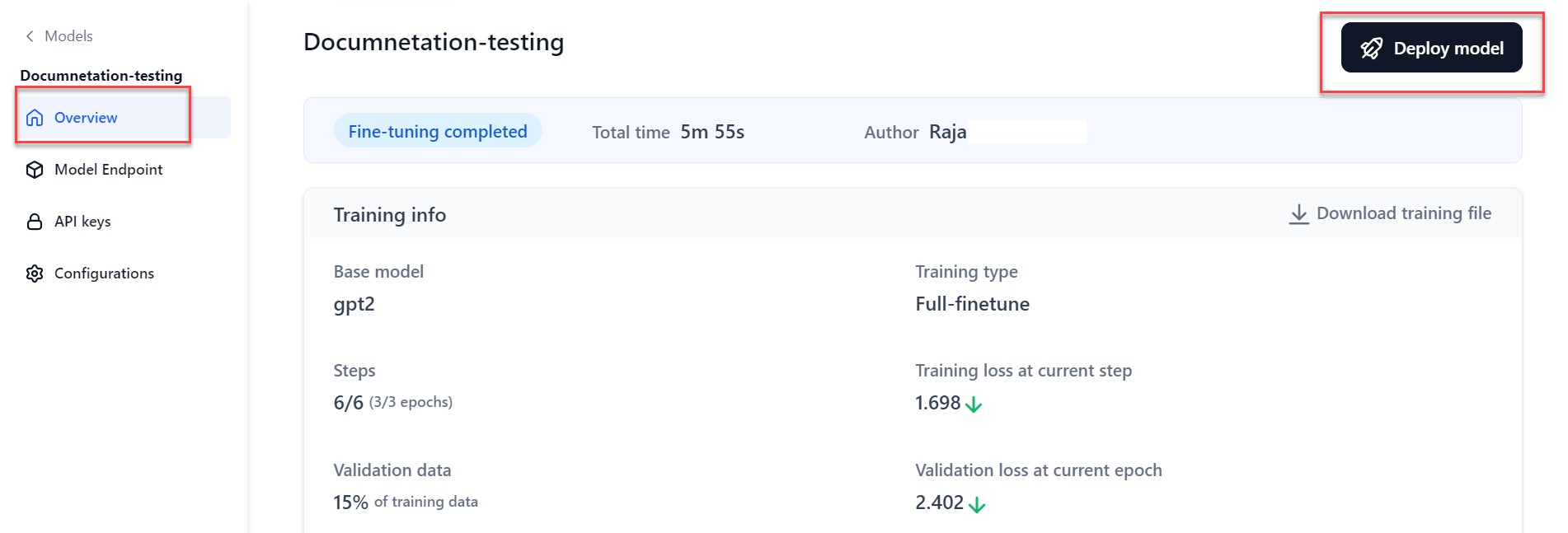

The model's Overview page is displayed. Click Deploy model in the top-right corner of the page.

Or

-

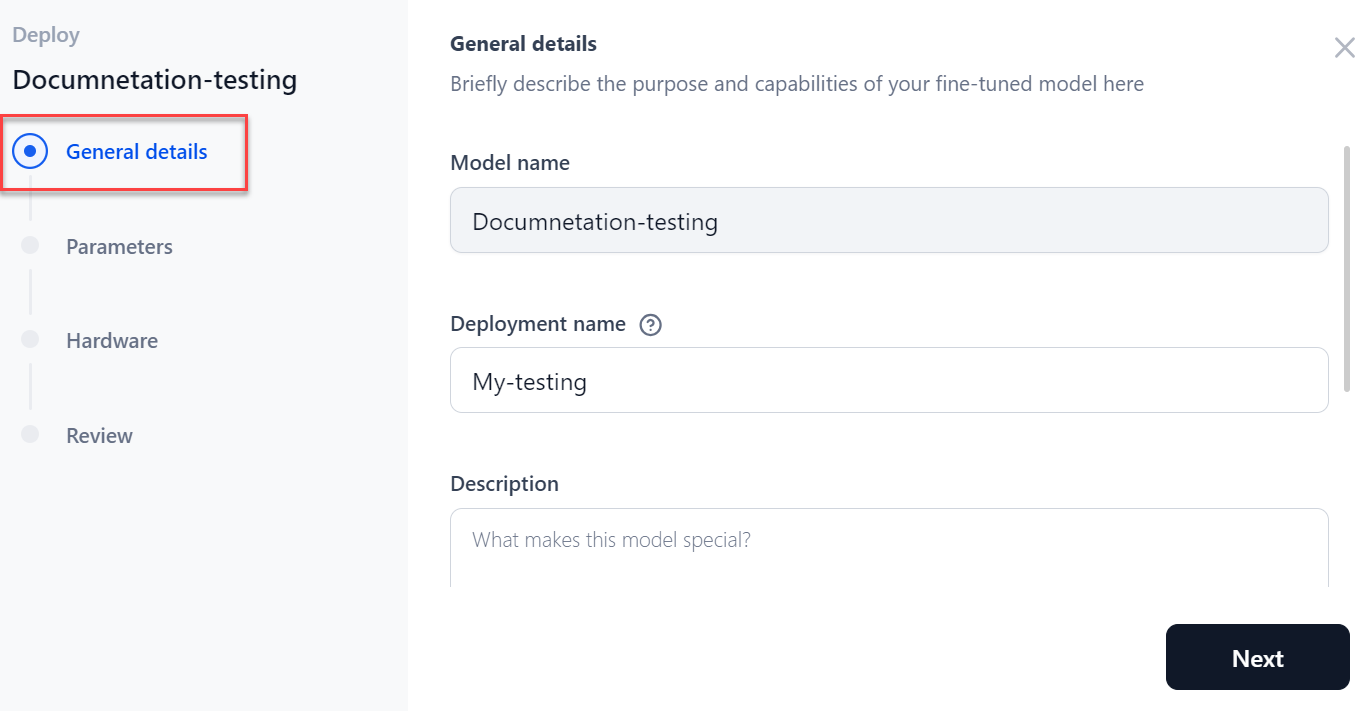

The Deploy dialog is displayed. In the General details section:

-

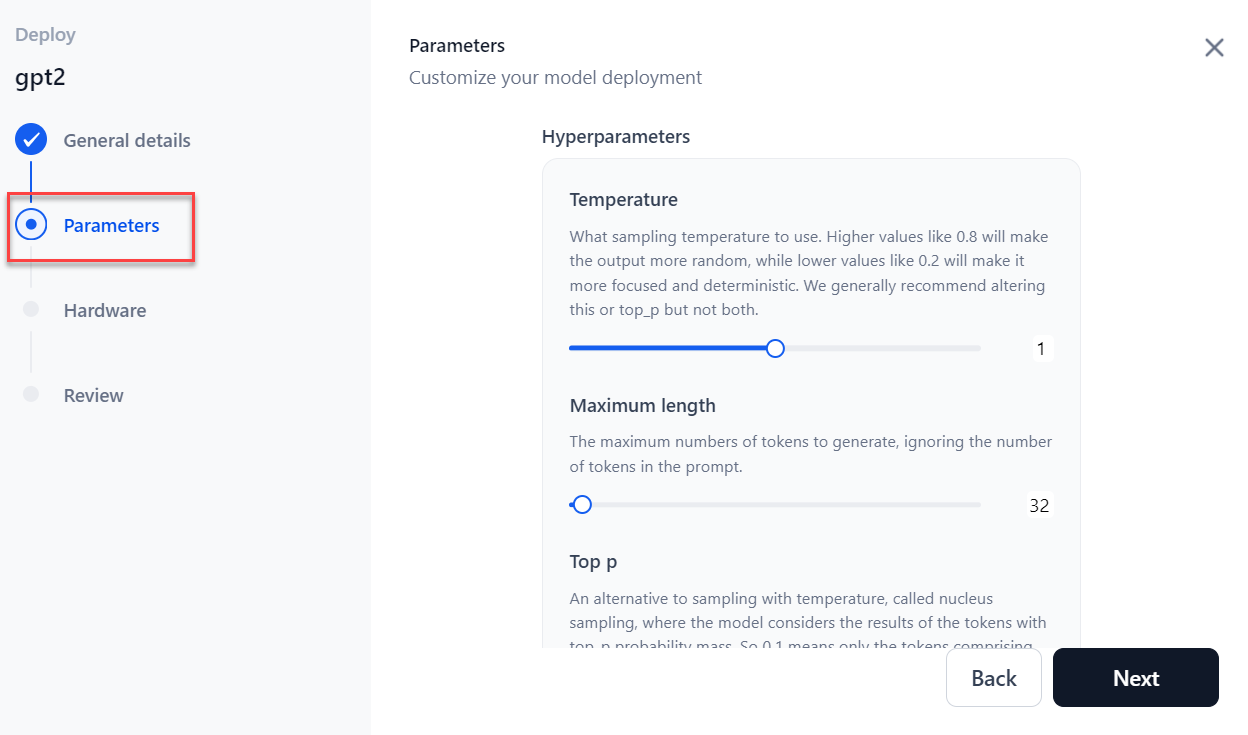

In the Parameters section:

- Select the Sampling Temperature to use for the deployment. Higher values make the output more random; lower values make it more focused and deterministic.

- Select the Maximum length, the maximum number of tokens to generate.

- Select the Top p value, an alternative to sampling with temperature (nucleus sampling) in which the model considers only the tokens that make up the top_p probability mass.

- Select the Top k value, the number of highest-probability vocabulary tokens to keep for top-k filtering.

- Enter the Stop sequences, the sequences at which the model stops generating further tokens.

- Enter the Inference batch size, which determines how concurrent requests are batched together during model inference.

- Select the Min replicas, the minimum number of model replicas to deploy.

- Select the Max replicas, the maximum number of model replicas the deployment can auto-scale to.

- Select the Scale up delay (in seconds), how long to wait before scaling up replicas.

- Select the Scale down delay (in seconds), how long to wait before scaling down replicas.
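The sampling parameters in the list above (Sampling Temperature, Maximum length, Top p, Top k, and Stop sequences) are standard text-generation controls. This page does not describe how GALE applies them internally, so the following is only a minimal Python sketch of how they commonly interact during decoding. `toy_model` and `SCRIPT` are hypothetical stand-ins for a fine-tuned model, and whether the stop sequence is kept in the returned text varies between serving stacks (this sketch drops it).

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=0, top_p=1.0, rng=random):
    """Pick one token from a {token: logit} dict using temperature, top-k and top-p."""
    if temperature <= 0:
        # Temperature 0 is conventionally treated as greedy decoding.
        return max(logits, key=logits.get)
    # Divide logits by the temperature, then apply a numerically stable softmax.
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(weights.values())
    probs = sorted(((tok, w / total) for tok, w in weights.items()),
                   key=lambda pair: pair[1], reverse=True)
    # Top-k: keep only the k most probable tokens (0 disables it), then renormalize.
    if top_k > 0:
        probs = probs[:top_k]
        kept_total = sum(p for _, p in probs)
        probs = [(tok, p / kept_total) for tok, p in probs]
    # Top-p: keep the smallest set of most probable tokens whose mass reaches top_p.
    nucleus, cumulative = [], 0.0
    for tok, p in probs:
        nucleus.append((tok, p))
        cumulative += p
        if cumulative >= top_p:
            break
    tokens, token_weights = zip(*nucleus)
    # random.choices normalizes the weights of the surviving tokens itself.
    return rng.choices(tokens, weights=token_weights, k=1)[0]


def generate(next_logits, prompt, max_length=16, stop_sequences=(), **sampling):
    """Generate at most max_length tokens, stopping early at any stop sequence."""
    output = []
    for _ in range(max_length):
        output.append(sample_next_token(next_logits(prompt + output), **sampling))
        text = "".join(output)
        hits = [text.index(stop) for stop in stop_sequences if stop in text]
        if hits:
            # This sketch drops the stop sequence from the returned text.
            return text[: min(hits)]
    return "".join(output)


# Hypothetical stand-in for a fine-tuned model: strongly favors the next word of SCRIPT.
SCRIPT = ["Hello", ",", " world", ".", " Bye", "."]


def toy_model(tokens):
    expected = SCRIPT[len(tokens) % len(SCRIPT)]
    return {tok: (5.0 if tok == expected else 0.0) for tok in set(SCRIPT)}


if __name__ == "__main__":
    print(generate(toy_model, [], max_length=6, temperature=0))  # Hello, world. Bye.
    print(generate(toy_model, [], max_length=6, stop_sequences=[" Bye"], temperature=0))  # Hello, world.
    # Higher temperature with looser filters: output varies from run to run.
    print(generate(toy_model, [], max_length=6, temperature=1.5, top_k=3, top_p=0.9))
```

Filtering with Top k and Top p before sampling removes unlikely tokens, so a low Top k or Top p makes output nearly as predictable as greedy decoding even at a nonzero temperature.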

- Click Next.

- Select the required Hardware for deployment from the dropdown menu, and then click Next.

-

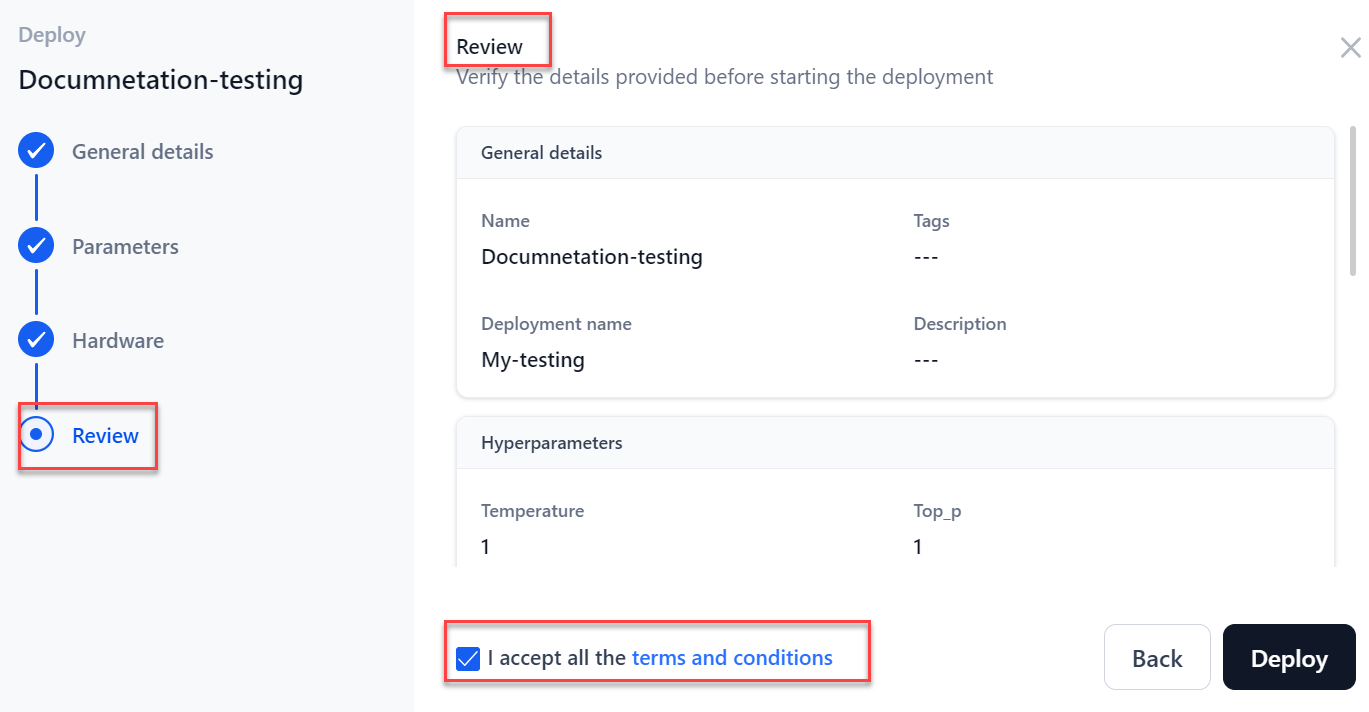

In the Review step, verify all the details that you provided earlier, and then select the “I accept all the terms and conditions” check box.

Note

To make changes, go back to a previous step by clicking Back or by clicking the corresponding step indicator in the left panel.

- Click Deploy.

The deployment of your model starts. After the deployment process is complete, the status changes to “Deployed” and you can find the API endpoint created for your fine-tuned model. You can now use this model for inference both within GALE and externally.
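The Min replicas, Max replicas, Scale up delay, and Scale down delay settings chosen in the Parameters section control how the deployed model scales with traffic. This page does not say how GALE decides that more replicas are needed, so the following Python sketch assumes a hypothetical per-replica capacity (`requests_per_replica`) and uses a simple delay-based debounce: a scaling need must persist for the configured delay before the replica count changes, and the count always stays between the minimum and maximum. `ReplicaScaler` and its methods are illustrative names, not a GALE API.

```python
import math
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ReplicaScaler:
    """Hypothetical autoscaler that honors Min/Max replicas and scale up/down delays."""
    min_replicas: int
    max_replicas: int
    scale_up_delay: float      # seconds a scale-up need must persist before acting
    scale_down_delay: float    # seconds a scale-down need must persist before acting
    requests_per_replica: int  # hypothetical capacity of a single replica
    replicas: int = field(init=False)
    _pending_since: Optional[float] = field(default=None, init=False)
    _pending_direction: int = field(default=0, init=False)

    def __post_init__(self) -> None:
        # A new deployment starts with the minimum number of replicas.
        self.replicas = self.min_replicas

    def desired(self, in_flight: int) -> int:
        """Replicas needed for the current load, clamped to [min_replicas, max_replicas]."""
        wanted = math.ceil(in_flight / self.requests_per_replica)
        return max(self.min_replicas, min(self.max_replicas, wanted))

    def observe(self, now: float, in_flight: int) -> int:
        """Record the load at time `now` (in seconds) and return the replica count."""
        target = self.desired(in_flight)
        direction = (target > self.replicas) - (target < self.replicas)
        if direction == 0:
            # Load matches current capacity: cancel any pending scaling decision.
            self._pending_since, self._pending_direction = None, 0
            return self.replicas
        if direction != self._pending_direction:
            # A new scale-up or scale-down need starts its own delay timer.
            self._pending_since, self._pending_direction = now, direction
        delay = self.scale_up_delay if direction > 0 else self.scale_down_delay
        if now - self._pending_since >= delay:
            self.replicas = target
            self._pending_since, self._pending_direction = None, 0
        return self.replicas


if __name__ == "__main__":
    scaler = ReplicaScaler(min_replicas=1, max_replicas=4, scale_up_delay=30,
                           scale_down_delay=120, requests_per_replica=8)
    # Prints replica counts 1, 1, 4, 4, 4, 1 for these (time, load) samples.
    for t, load in [(0, 30), (10, 30), (30, 30), (40, 5), (100, 5), (160, 5)]:
        print(t, scaler.observe(t, load))
```

With these example values, a load increase must last 30 seconds before replicas are added, while extra capacity is kept for 120 seconds after load drops. A longer scale-down delay than scale-up delay is a common choice because it avoids repeatedly adding and removing replicas when traffic fluctuates.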